Taking an average of specific Quarters, like all Q1 or all Q2 over many years

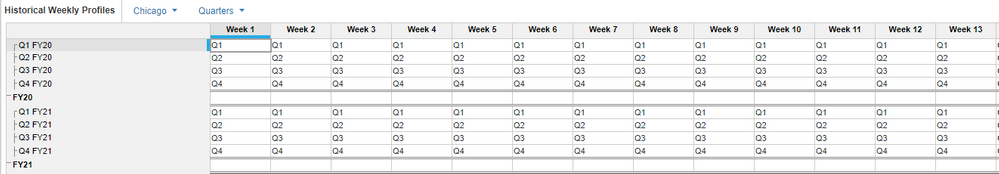

Hi, I'm attempting to generate some historical trends to drive future projections. In doing so, I have % Weekly over a 13-week period (one quarter), and I have this over many quarters. I'm trying to capture an average of all Q1 % weekly values (such as FY18 Q1, FY19 Q1, FY20 Q1, etc.), to predict a likely quarter projection by week for Q1 weeks.

This Historical module has the raw data (first snapshot), and each quarter has it's designated quarter value (second snapshot):

On another module, I'm attempting to capture all Q1 values using TIMESUM, but I don't know how to specify "Q1" explicitly:

Both tables have "Quarter" as a list and a dimension, but because the Historical module has Time, and Weekly Profiles module does not, I'm unable to do a simple SUM/LOOKUP to get my data. I'm having to try the TIMESUM to overcome this, but now I'm unclear as to how to only pull Q1 values. (I'm only reflecting FY20, but I have FY19 and FY18 I would be adding, and using those 3 years to average out Q1).

In both modules, the Week columns are a List and a dimension, not a Time Range.

Any direction would be much appreciated.

Thank you!

Best Answer

-

Hi Chris, thank you - I removed time from the Historical module and setup a Quarter FY list of just the time periods I needed. This allowed me to put everything together, similar to what you had!

Here's my final setup, where the time values on the rows are a list, not time:

So after removing the time dimension, I created a list "Time List: Quarter FY", and broke it into FY and Quarter.

In the summary module, I simply do an average based on the Quarter:

Right now I only have 1 year of data, so the "average" function isn't doing much, but hopefully once I get more data this will still hold true.

Thanks again!

1

Answers

-

I think you need to assign the % Profile in the historical module to four different line items, one for each generic quarter, by checking whether the Quarters line item is equal to Q1, Q2, Q3, or Q4. You also need to create four separate count line items with value of 1 if the Quarters matches with the line item.

In the Weekly Profiles module, you can divide the sum of % profile with the count by either selecting Time.All Periods if Total of All Periods is enabled in the Time Settings or using TIMESUM if it is not.

Regards,

Andre

1 -

@andre.lie @CommunityMember123764

Please do not use TimeSum when the target (the line item containing timesum) is dimensionalized by Time (Time in the Applies To) as this is a huge performance hit. Instead, consider using MovingSum().

Rob

3 -

Thanks for the hint @rob_marshall !

0 -

Hi,

I can offer another solution, not the cleanest design but it seems to get the job done.

What I did is to first move away from real time with a Fake Time list (using LOOKUP and a simple mapping table which can be automated via formula).

Then I aggregated the % based on their respective quarter (this is using a genereic Quarter list not time dimension).

INPUT:

SOLUTION:

SPEC:

@rob_marshall is there a reason why we can't move away from the time dimension in one simple aggregation? (e.g. with SUM on a list formated line item)

1 -

Absolutely, very happy to and this is a question we get a lot. TimeSum is not meant to be used when the target (where you are doing the timesum) has Time in the Applies To

Now, that doesn't mean it doesn't work because it does, but it adds additional overhead that is not needed. Check out this video @MarkWarren and I put together last November.

https://www.youtube.com/watch?v=PvimWuX5jVw

Hope the helps

1 -

Hi Rob,

Yes I had seen the video, very interesting.

However that wasn't my question. In this particular case the target is not dimensioned by time but Fake Quarters (Q1, Q2, Q3, Q4 so not linked to any specific year). The problem would be the same with any other list really.

You can't seem to be able to remove the time dimension and aggregate onto another list (not part of the source 'Applies to' section) in one go...either that has changed recently or I had never noticed it before.

Cheers,

Douglas1 -

I do not believe you need to use TIMESUM or MOVINGSUM.

My suggestion is that you use a combination of AVERAGE summary option, ALL TIME PERIODS and SELECT to pull through the correct data into your target module.

- Firstly, enable 'Total of All Time Periods,' in your model time settings

- The update the summary settings for the % profile line item to, 'Average.'

- In your target module reference the '% Profile,' line item in the source and use the following select function =Historical Weekly Profiles.% Profile[Select:Time.All Periods]

This will pull through the 'All Periods,' summary in the source and as the summary settings for this line item are set to, 'Average,' the average will be shown against the relevant week and quarter. Both quarter and week in this final module are lists NOT time settings so when you use this data in downstream modelling you will need to utilise the mapping present in the the other line item in the source shown in your query.

Good luck,

Chris

1 -

Hi Chris,

This was a good idea however I believe what the user is after here are averages by quarter (e.g. Q1 2020 and Q1 2021 for week 1) and not for all quarters at once like you are suggesting.

The summary method set to AVERAGE gets you the average for the year, or all periods, for every quarters combined.

In your example, the Q1 average should be 2% for week 1 (average of 1% and 3%) and for Q2 week 1 should be 3.5% ( average of 3% and 4%). It shouldn't be the same value.1 -

Yes, you are right. Good spot.

The issue here is removing the time dimension and restating the numbers in terms of the Quarters list.

You can achieve this by mapping your weeks and quarters lists into a relevant quarter time dimension.

Using this you can map the source into a module where the time dimension has is set to year. Set this line item summary option to average and use select to reference All Time Periods to remove the year time.

Chris

1 -

Sorry, I had misunderstood your question, I thought it was specifically why you shouldn't use TimeSum() when the target used Time in the Applies To. If your target does not use Time, then sure. But, from the looks of your pictures, you might be coming from an Essbase background, if my memory serves, where you split the weeks from the years into different dimensions. Can you do that with Anaplan, yes, but the only use case I can think of doing that is for reporting purposes. As for the moving from the Time dimension to a fake time dimension, you can do that, use your mapping and then do a lookup instead of a sum (if I am understanding your question).

Rob

1 -

Just realised that you do not need to include the weeks list in the mapping module.

This reduces the admin load on manually maintaining the mapping.It is possible to build a number of text logic formulas combined with FINDITEM to automate this mapping if required.

2

2 -

Finditem(Time,Name(item(Fake Q List))&" "&name(item(time)))

2 -

Thanks for this James, the formula actually helped me really understand the usage of the basics of finditem, name, and item. I wasn't able to make it work the way this is now, since I think the formula assumed I needed time in both modules, but nonetheless the direction helped me find a solution, which I will post soon. Thank you!

0