Anaplan Drinks from Azure Data Lake

Anaplan uses a proprietary storage system that is only accessible via APIs. For primarily Azure data environments, this can present some challenges to keeping fresh data flowing into Anaplan. However, we’ve found a way to accomplish this in an easy and reliable way as part of the common Azure big data architectural style, coupled with a cloud-hosted interface to Anaplan’s REST API.

Anaplan uses a proprietary storage system that is only accessible via APIs. For primarily Azure data environments, this can present some challenges to keeping fresh data flowing into Anaplan. However, we’ve found a way to accomplish this in an easy and reliable way as part of the common Azure big data architectural style, coupled with a cloud-hosted interface to Anaplan’s REST API.

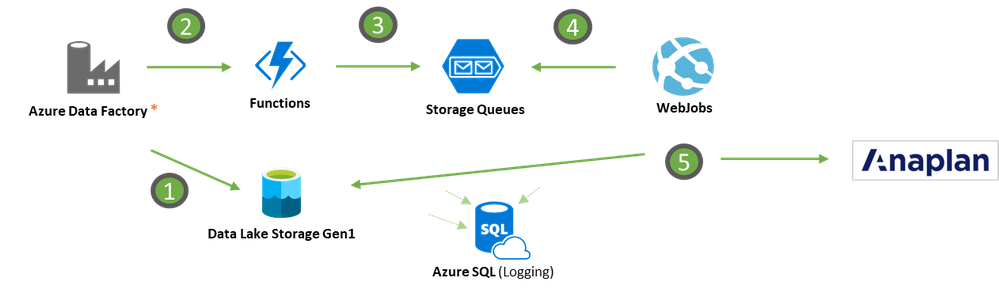

Solution Architecture

The central pieces of the architecture are Azure Data Lake Store (ADLS), and Azure Data Factory (ADF).

ADLS offers cheap, abundant storage that is easily accessible by other Azure and non-Azure services. ADF provides easy data extraction and simple job orchestration—arguably all you should ever need. For on-premises sources, ADF also offers a free, almost zero-configuration Integration Runtime that can run extract jobs on-prem and push data into the data lake.

For transformations and delta processing, we used Databricks because it can easily process files in ADLS in-place, without copying to yet another location (such as SQL). Python is simple to use and powerful, and the service scales up and down nicely. Compute costs are $0 when not in use.

Cloud-Hosted API Client

The key piece of our solution is a custom API that abstracts and simplifies the interface with the Anaplan v1.3 REST API. This was necessary for more than a few reasons:

- Uploading and downloading files requires splitting files into 25MB chunks (recommended size).

- Processing in Anaplan is asynchronous and must be monitored via polling for completion.

- File and process names need to be translated to internal id’s used in subsequent calls.

- Operations sometimes fail and must be re-run (e.g. network issues while uploading file chunks).

- ADF is not well-suited for making and orchestrating many interrelated API calls.

- Long-running processes in Anaplan cause the /files and /processes API endpoints to be locked.

The design of an Anaplan API Client follows a standard web-queue-worker architecture, which addresses these and many other more detailed concerns. It is implemented as follows:

- ADF copies data from sources to ADLS.

- If successful, ADF then calls an Azure Function to request an upload + import to Anaplan.

- The Azure Function adds the request to a queue, which processes requests sequentially.

- A WebJob watches the queue for work, removing the first available item in the queue.

- A WebJob performs the work, which involves calling the Anaplan APIs to upload and import data.

Overall, we found this method very reliable, passing many of our positive and negative testing scenarios.

Power BI Pipeline Dashboard

To monitor the overall progress of the ADF pipelines and the activities of the custom API client, a Power BI report was made with Direct Query support so that it represents in real-time the current status of all pipelines:

Also, to monitor data quality metrics, the following report was created. Using this, trends can be determined and watched for individual pipelines.

Conclusion

We set out to integrate Azure with Anaplan. We succeeded, but that success would be short-lived without also providing a modern data engineering platform, and development patterns that would respond well to future needs and growth. The solution here achieves a nice balance of usability and maintainability from a development perspective, reliability from an operational standpoint, and makes it easy for Anaplan users to take clean, fresh data for granted.

About the Author

Keno Granger is a Data & Analytics consultant at Slalom Consulting. He is a PM, Architect, and Agilist who loves to help enable his teams to solve business intelligence problems while having fun, being effective, and reducing the stuff that sucks. He has worked for over 15 years in the local Seattle consulting market with everyone from small start‑ups to large companies and non-profits.

Keno Granger is a Data & Analytics consultant at Slalom Consulting. He is a PM, Architect, and Agilist who loves to help enable his teams to solve business intelligence problems while having fun, being effective, and reducing the stuff that sucks. He has worked for over 15 years in the local Seattle consulting market with everyone from small start‑ups to large companies and non-profits.

Connect with me: LinkedIn

Comments

-

Cool case! Thank you for sharing it!

0