Hi All,

I converted this date format (a two-digit month at the start of the text and a four-digit year at the end) into a period using this formula:

PERIOD(DATE(VALUE(RIGHT(Reporting Period, 4)), VALUE(LEFT(Reporting Period, 2)), 1))
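To make the logic of that formula explicit, here is a rough Python analogue of the `DATE(...)` part. It is only an illustration, not Anaplan code. It assumes `Reporting Period` is text whose first two characters are the month and whose last four are the year (e.g. `03/2024`). The function name `reporting_period_to_date` is hypothetical, and Anaplan's `PERIOD()` step is not reproduced; the sketch stops at the first-of-month date that `PERIOD()` would receive.

```python
from datetime import date


def reporting_period_to_date(reporting_period: str) -> date:
    """Hypothetical analogue of DATE(VALUE(RIGHT(x, 4)), VALUE(LEFT(x, 2)), 1)."""
    year = int(reporting_period[-4:])  # RIGHT(Reporting Period, 4) -> year
    month = int(reporting_period[:2])  # LEFT(Reporting Period, 2) -> month
    # DATE(year, month, 1): the first day of that month.
    # Raises ValueError if the extracted "month" is not between 1 and 12.
    return date(year, month, 1)


print(reporting_period_to_date("03/2024"))  # 2024-03-01
```

The sketch also shows why the formula is tied to one text layout: a value such as `2024-03` yields `20` from the first two characters, which is not a valid month, so the conversion fails.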

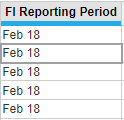

How can I convert this date format to a period in Anaplan, as I did above? The formula above doesn't work for it. Is there a workaround for converting it to a period?