
Re: Level 2 - Sprint: Activity import Data into DAT03 Historic Volumes Module

In case anyone stumbles on this page with the same issue as nfernandez: the solution is simply Match Names.

Kabir


Re: Convert list to text

Have you tried using the NAME Function?

https://community.anaplan.com/anapedia/calculation-functions/name

This should allow you to convert the value from a list item to text.

-Chris

ChrisMullen


How I Built It: Codebreaker game in Anaplan

Author: Chris Stauffer is the Director, Platform Adoption Specialists at Anaplan.

My kids and I enjoy code-breaking games, and so I wondered if I could build one in Anaplan. Over the years, there have been many versions of code breaker games: Cows & Bulls, Mastermind, etc.

The object of this two-player game is to solve your opponent’s code in fewer turns than it takes your opponent to solve your code. A code maker sets the code and a code breaker tries to determine the code based on responses from the code maker.

I came up with a basic working version last year during an Anaplan fun build challenge. Here's a short demo:

If you would like to build it yourself, the instructions on how the game works and how to build it are below.

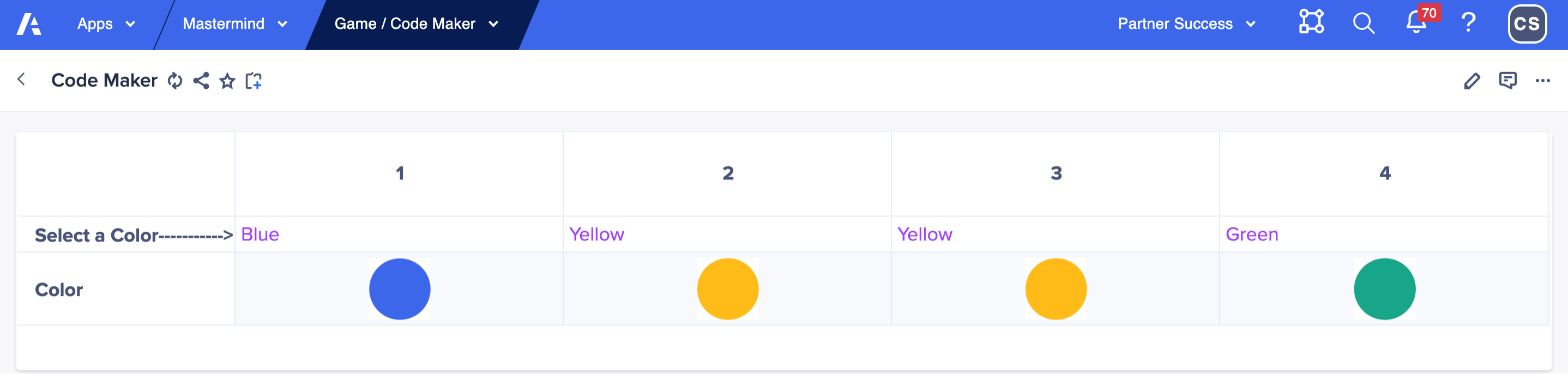

Game start: Code maker input

Player 1, the code maker, starts the game by going to the code maker page and choosing a secret four-color code sequence from a drop-down list in the grid. Code makers can use any combination of colors, including two or more of the same color. You could set up a model role and restrict access to this page using page settings so that the code breaker cannot see it.

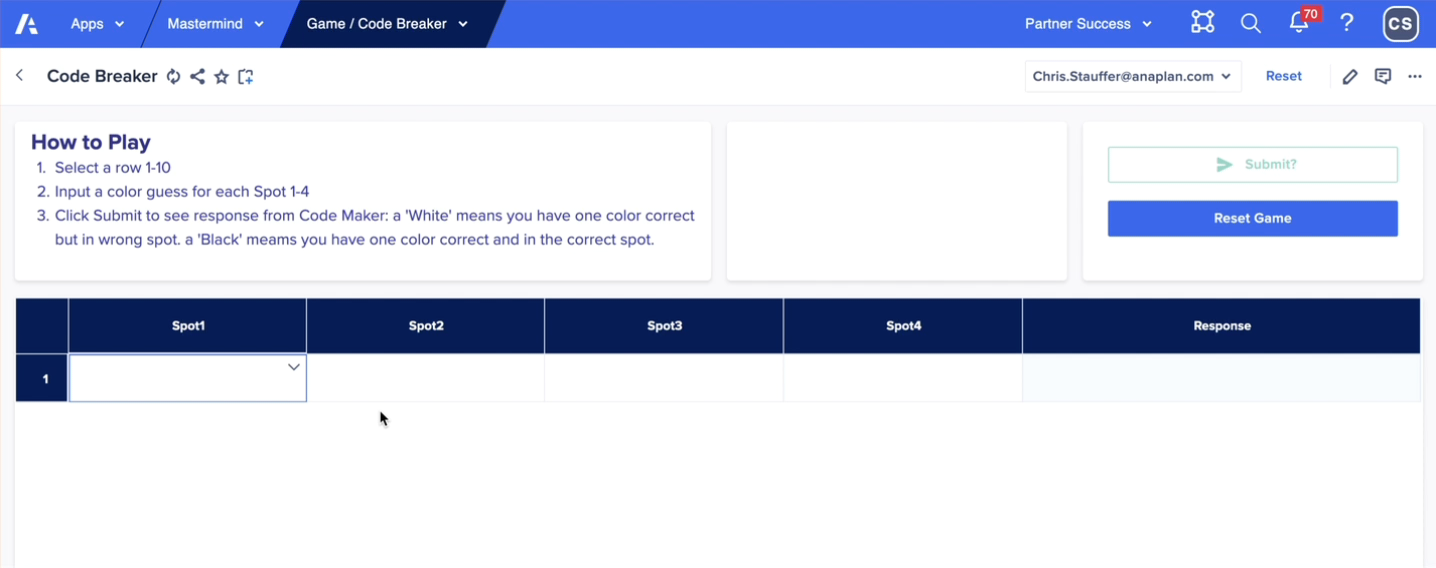

Player 2: Code breaker input

The code breaker chooses four colors in the first row attempting to duplicate the exact colors and positions of the secret code.

The code breaker simply clicks the first row in the grid, uses a drop-down list to make color guesses, and then clicks the Submit? button. A data write action writes TRUE into a Boolean in the code breaker module, which switches on the module's checking logic, turns on DCA to lock the submitted row, and produces an automatically calculated code maker response. Unlike the real board game, the code maker does not have to think about the response or drop those tiny black and white pins into the tiny holes in the board: the calc module does the work automatically and correctly every time! I've been guilty of giving the wrong response to a code breaker attempt, which can upset both the game and the code breaker.

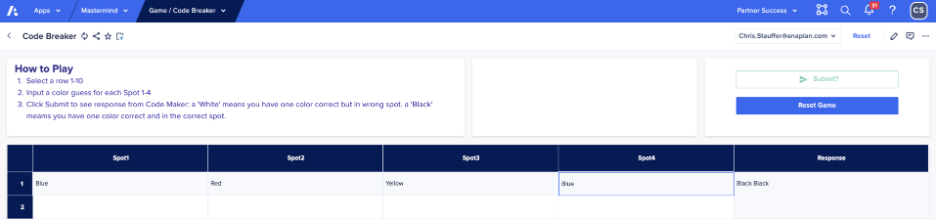

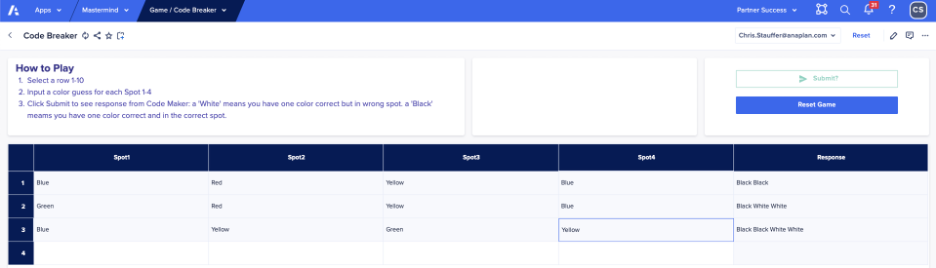

First guess

Below, the code breaker selected blue-red-yellow-blue and clicked the Submit? button. Since two of the guesses are the right color AND in the right column position, the calculated response is “Black Black”, telling the code breaker that two guesses are the correct color in the correct position.

A black response indicates the code breaker has placed a correct color in the correct position. A white response indicates a correct color in an incorrect position. No response indicates a guessed color was not used in the code.
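The black/white rule above is the classic Mastermind scoring rule. As a hedged illustration, here is a minimal Python sketch of that rule. It is not the author's Anaplan line-item logic, and the secret code in the demo is hypothetical, chosen only because it is consistent with the three responses described in this walkthrough.

```python
from collections import Counter


def score(secret: list[str], guess: list[str]) -> tuple[int, int]:
    """Return (black, white) pin counts for one guess against the secret code."""
    if len(secret) != len(guess):
        raise ValueError("secret and guess must have the same length")
    # Black: right color in the right position.
    black = sum(s == g for s, g in zip(secret, guess))
    # Colors shared by both codes, counted with multiplicity (min of each count).
    shared = sum((Counter(secret) & Counter(guess)).values())
    # White: right color, wrong position = shared colors not already counted as black.
    white = shared - black
    return black, white


def response_text(black: int, white: int) -> str:
    """Render the pins the way the post describes them, e.g. 'Black Black'."""
    return " ".join(["Black"] * black + ["White"] * white)


if __name__ == "__main__":
    # Hypothetical secret, consistent with the three responses in the walkthrough.
    secret = ["blue", "yellow", "yellow", "green"]
    for guess in (["blue", "red", "yellow", "blue"],
                  ["green", "red", "yellow", "blue"],
                  ["blue", "yellow", "green", "yellow"]):
        print(guess, response_text(*score(secret, guess)))
```

Counting shared colors with multiplicity is the key design choice: in the first guess, the second blue does not earn a white pin because the hypothetical secret contains only one blue.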

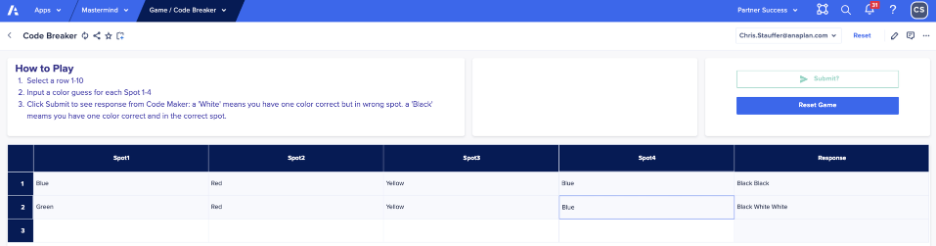

Second guess

In the second row, the code breaker entered green in spot 1 but kept red-yellow-blue. Now only one color is in the correct position (yellow in spot 3), but the code breaker gains the insight that three colors are correct, two of them in the wrong position, hence the black-white-white response.

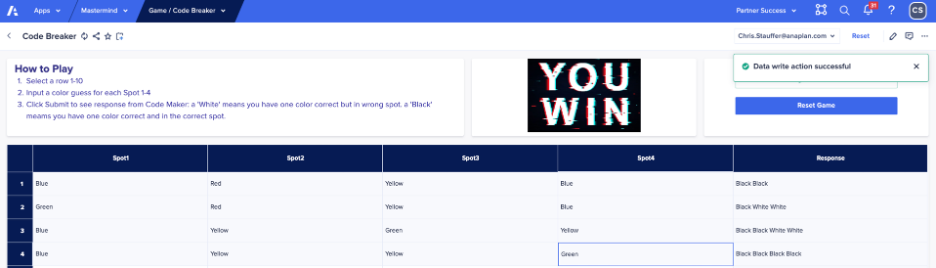

Third guess

The code breaker selected blue-yellow-green-yellow and now has all the correct colors with only two out of position.

Fourth guess

You have to be a little lucky to break the code on the fourth guess, but hopefully by now you get the idea of how the game works in Anaplan.

The winner of the game is the player that solves the code in fewer guesses than the other player, so each player takes a turn as code maker and code breaker.

Conclusion

The model uses a lot of conditional formulas, text functions (&, MID, ISBLANK, FIND), and custom matching logic to identify right-color, wrong-position matches (white) and right-color, right-position matches (black). A couple of very simple UX pages make it easy to support a two-player game. You'll have to create the model roles for the code breaker and code maker and set up the page security settings.
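Text functions alone are not quite enough for the white count, which is presumably why custom matching logic is needed. The hedged Python sketch below mimics spreadsheet-style MID and FIND with two hypothetical helpers (1-based positions, and 0 when the text is not found, assumed here to mirror Anaplan's behavior). Each color is encoded as one letter (B, R, Y, G), an assumption for illustration, so a code joined with & becomes a four-character string. It is not the author's exported formula set.

```python
def mid(text: str, start: int, length: int) -> str:
    """Hypothetical MID helper: substring from a 1-based start position."""
    return text[start - 1:start - 1 + length]


def find(needle: str, haystack: str) -> int:
    """Hypothetical FIND helper: 1-based position of needle, or 0 if absent."""
    return haystack.find(needle) + 1


def naive_white(secret: str, guess: str) -> int:
    """Whites counted by asking only 'is this color anywhere in the code?'."""
    white = 0
    for pos in range(1, len(guess) + 1):
        g = mid(guess, pos, 1)
        if g != mid(secret, pos, 1) and find(g, secret) > 0:
            white += 1
    return white


def matched_white(secret: str, guess: str) -> int:
    """Whites counted while consuming each secret letter at most once."""
    n = len(secret)
    # Pool of secret letters not already claimed by a black (exact) match.
    pool = "".join(mid(secret, p, 1) for p in range(1, n + 1)
                   if mid(secret, p, 1) != mid(guess, p, 1))
    white = 0
    for pos in range(1, n + 1):
        g = mid(guess, pos, 1)
        if g == mid(secret, pos, 1):
            continue  # black match, scored separately
        hit = find(g, pool)
        if hit > 0:
            white += 1
            pool = pool[:hit - 1] + pool[hit:]  # remove the matched letter
    return white
```

With a hypothetical secret BYYG and the first guess BRYB, the naive check awards one white for the second B, while the matched version correctly awards none.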

Attached is the line item export in case you are curious or want to build it yourself. There are probably multiple ways to build the logic or make the formulas more elegant, but for me it was a fun diversion.

Enjoy!

Synchronize Export Action based on UI Context Selector

Oftentimes, when we publish an explicit export action as a button on a dashboard, we either configure it as a full export (Tabular Single Column or Tabular Multiple Column) or need to provide a Boolean check box to identify which context users are trying to export.

How often is this impacting your users?

It frequently impacts users whenever we create export buttons. Most of our grids are multidimensional, so we need to provide dynamic export actions rather than full data exports. This only works when the grid has selective access control; if not, a Boolean check box is the only solution.

Who is this impacting?

This impacts both model builders and UX capability. Not all users can easily be trained to use Anaplan's built-in export feature.

The ideal solution is to enable synchronisation of export actions with the UI context selector. This way, users can select the context in the UI and click the button to export the data.

vickysvs


Re: Accessible by design: Our new accommodations for certification exams

Hi

I have completed the recertification exam and yes! Additional resources such as the Planual, Community, and Anapedia are available to use, even at a Kryterion test center.

Thanks

Pujitha

PujithaB


Retain the column sizes for 'My Pages'

Issue: When building dashboards for users, we ensure column widths are consistent across all columns. However, users often filter data and adjust column widths based on their specific needs. They can save these adjustments in a Personal Copy, but upon re-login, the column widths are reset, requiring them to manually resize columns again—leading to wasted time and frustration.

Suggestion: It would be highly beneficial if My Pages retained user-defined column widths in users' Personal Copies, ensuring a smoother and more efficient user experience.

End user documentation made easy with Anaplan

Author: Hillary Sich is a Certified Master Anaplanner and Senior Manager at Accenture.

Do you actually enjoy creating end user documentation for the models we build? Are you creating end user documentation as a Word document with multiple screen shots per page? If so, then this article is NOT for you!

Why not leverage Anaplan for end user documentation?

Pros:

- Never outdated

- Faster to create than Word docs with screenshots

- Minimal model building

- Minimal impact on model size

Cons:

- Be aware of Report Manager display limitations

- Must be built within the Model where the App pages live if you want to be able to link pages

- Forces detailed instructions on App Pages

Not convinced? I am not offended!

Here is what you need to build the documentation:

Three lists

- Pages (Create a list of pages to document)

- Activities (What can be done on the page)

- Topics (What to include on the documentation page such as Role, Purpose, etc)

Six modules

- Three properties modules, one for each list

- Topics x Page

- Global Documentation

- Activities x Page

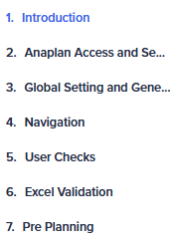

Three UX Pages

- Page details

- User documentation set up

- Report Manager as output
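As a rough analogy only, the lists and modules above map onto plain data structures: each list is a list of names, and a module dimensioned by two lists is a table keyed by (item, page). The Python sketch below is hypothetical: the class and method names, the sample pages and activities, and the use of a Boolean for Activities x Page are assumptions, and the properties modules and Global Documentation are omitted to keep it short.

```python
from dataclasses import dataclass, field


@dataclass
class DocModel:
    """Hypothetical stand-in for the three lists and two 'x Page' modules."""
    pages: list[str]
    topics: list[str]
    activities: list[str]
    # Topics x Page: free text per (topic, page) cell.
    topic_text: dict[tuple[str, str], str] = field(default_factory=dict)
    # Activities x Page: assumed Boolean per (activity, page), used for filtering.
    activity_on_page: dict[tuple[str, str], bool] = field(default_factory=dict)

    def page_doc(self, page: str) -> dict:
        """Assemble the output for one page, skipping blank topic cells."""
        topics = {t: self.topic_text[(t, page)] for t in self.topics
                  if self.topic_text.get((t, page))}
        activities = [a for a in self.activities
                      if self.activity_on_page.get((a, page), False)]
        return {"page": page, "topics": topics, "activities": activities}
```

Because every output is derived from the list and module cells, editing one cell updates the generated page, which is the "never outdated" advantage listed above.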

Still interested?

Here are the Blueprints…

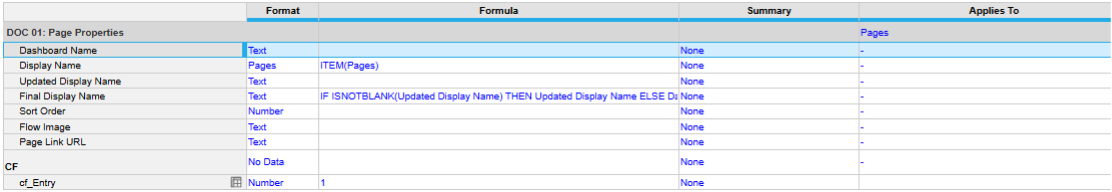

DOC01: Page Properties

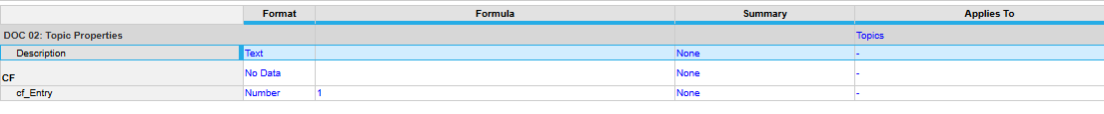

DOC02: Topic Properties

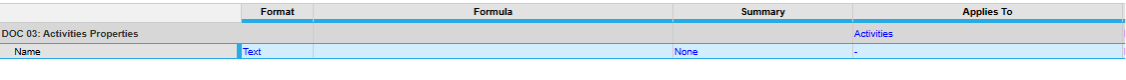

DOC03: Activities Properties

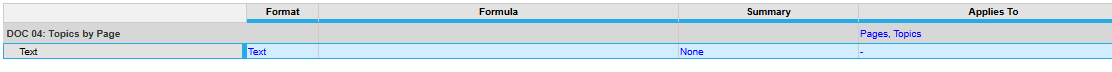

DOC04: Topics by Page

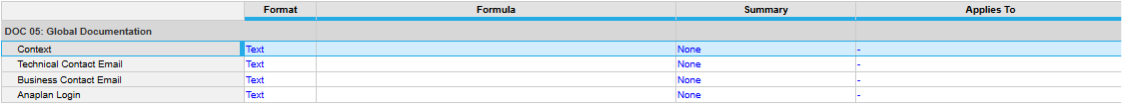

DOC05: Global Documentation

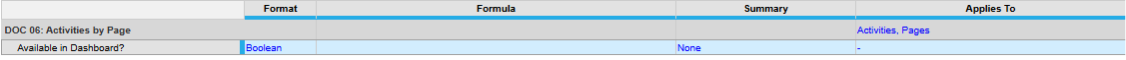

DOC06: Activities by Page (Used only for filtering in UX)

What is that? About 15 minutes of build time? Totally worth it!

Use your imagination when creating the three UX pages (no screenshots here).

UX 1: Page details: More of an end-user page for entering the details to include in the output page, such as links to model flows, page links, and text for the purpose and activities.

UX 2: User documentation setup: More of an Admin page to maintain lists.

UX 3: The main output of all of your hard work. Here is a short list of slides that could be included:

Slides 1-5 are examples of global information.

Slide 7 is used as a landing page with links to pages by App category


Slide example dimensioned by Page:

End user documentation is an important and valuable deliverable for all clients. Leveraging Anaplan to produce maintainable, professional documentation is now easier than ever!

What tips would you add? Leave a comment!

Re: Bulk Copy Action for Production Lists

Coming back to re-up this. This is critical functionality for giving business users true control over their models. Anaplan, where is the update? This was called out as coming in one of the upcoming releases, but we have yet to see it.

jepley


Re: Share your favorite 2025 Anaplan features — November Community Challenge

Thank you for introducing new Anaplan features this year.

These updates have made the platform more powerful and user-friendly, and I appreciate the continuous innovation.

My top 3 favourite features for 2025 are as follows:

1. Anaplan Module Beta Feature:

You can now sort and filter modules by attributes (like functional area, time scale, versions) and edit which columns appear.

You can also see which UX pages are linked to each module.

2. Combined Grids:

Combined Grids allow page builders to merge data from up to five different modules into a single, unified grid on a UX page, provided the modules share a common row axis. This solves a major challenge for planners who previously had to use multiple grid cards side-by-side.
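To picture what merging up to five modules on a common row axis means, here is a conceptual Python sketch. It is not how Anaplan implements combined grids; the module names, line items, and nested-dict data layout are hypothetical. It enforces only the two constraints mentioned in the post: at most five modules, and the same row items in every module.

```python
MAX_MODULES = 5  # the limit stated in the post


def combine_grid(
    row_items: list[str],
    modules: dict[str, dict[str, dict[str, float]]],
) -> list[dict[str, object]]:
    """Merge modules that share one row list into a single grid (hypothetical layout)."""
    if not 1 <= len(modules) <= MAX_MODULES:
        raise ValueError(f"a combined grid takes 1 to {MAX_MODULES} modules")
    for name, data in modules.items():
        # Every module must be dimensioned by exactly the same row items.
        if set(data) != set(row_items):
            raise ValueError(f"module {name!r} does not share the common row axis")
    grid = []
    for item in row_items:
        row: dict[str, object] = {"row": item}
        for name, data in modules.items():
            # Prefix each column with its module name so line items cannot collide.
            for line_item, value in data[item].items():
                row[f"{name}.{line_item}"] = value
        grid.append(row)
    return grid
```

For example, hypothetical modules REV01 (Revenue) and HC01 (Headcount), both by region, would produce one row per region carrying both line items, instead of two grid cards placed side by side.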

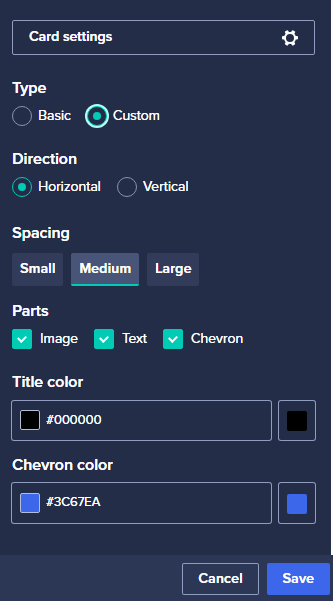

3. UX Card Template Enhancements:

You can now design card templates with specific formatting, layout, and structure—and reuse them across multiple UX pages.

This feature saves time and ensures consistency across dashboards. Being able to reuse templates across multiple apps and pages makes large-scale implementations much more efficient.

Re: Share your favorite 2025 Anaplan features — November Community Challenge

My Favorite Anaplan Features in 2025

1. Engine-specific content in Anapedia integration

The engine-specific content in Anapedia integration is one of the most useful enhancements for model builders. It allows you to access documentation, guidance, and examples that are tailored to the specific calculation engine you are working with—whether it's the Classic Engine or the Polaris Engine. Instead of searching through general documentation, you instantly get content that matches the engine’s behavior, constraints, and optimization patterns.

This makes it easier to:

- Understand how formulas behave differently between engines

- Follow best practices that are specific to Classic or Polaris

- Avoid performance issues by using the right engine-optimized techniques

2. Combined grids for Multi-Module Reporting.

Combined grids give page builders the ability to combine up to five modules into a single grid view on a UX page. Model builders can now create a combined grid by selecting Add Grid Sections in the view designer. They can:

- Analyze data and its properties in a single view.

- Apply filters and sorts across modules in a combined grid for more flexible analysis.

3. Role-based page access

This feature allows model builders to assign UX page visibility based on user roles, improving security and simplifying the user experience.

It helps you:

- Maintain strong security by preventing unauthorized page access

- Simplify the user experience by hiding pages that are not required

- Reduce confusion, especially for new or occasional users

- Manage access easily across apps and pages from a single, centralized setting

- Support clean governance as the model grows and more pages are added
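Conceptually, role-based page access is a lookup from each page to the roles allowed to see it, kept in one central place. The Python sketch below is hypothetical: the page names, role names, and the rule that a user sees a page if any of their roles is allowed are assumptions, not Anaplan's implementation.

```python
# Hypothetical central setting: each page mapped to the roles allowed to see it.
PAGE_ROLES: dict[str, set[str]] = {
    "Forecast Input": {"Planner", "Admin"},
    "Admin Settings": {"Admin"},
    "Executive Summary": {"Planner", "Admin", "Viewer"},
}


def visible_pages(user_roles: set[str], page_roles: dict[str, set[str]]) -> list[str]:
    """Pages granted to at least one of the user's roles, in configuration order."""
    return [page for page, roles in page_roles.items() if roles & user_roles]
```

Under these assumptions, `visible_pages({"Viewer"}, PAGE_ROLES)` returns only `["Executive Summary"]`, so an occasional user never sees the input or admin pages.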