Author: Taichi Amaya, Certified Master Anaplanner, and Financial Planning and Analysis Specialist at Pasona Group, Inc.

Reading time: approximately 5-6 minutes.

"We need more detail in our forecasts. Let's have each sales rep submit their numbers individually — that way, we'll be more accurate."

Sounds reasonable, right?

But here's what actually happens: Fifty sales reps, each second-guessing their pipeline, each hedging slightly on the conservative side. By the time these forecasts roll up, that collective caution becomes a systematic bias — one that no amount of detailed analysis can fix.

This isn't a hypothetical scenario. It's a pattern I've seen repeatedly in FP&A practice.

In this post, I'll challenge the "more detail = more accuracy" assumption and share a principle I've developed through years of FP&A practice: granularity = responsibility. You'll learn:

- Why coarser planning often produces statistically better forecasts

- How granularity amplifies bias in predictable ways

- A practical framework for determining the right level of detail

Actuals vs. plans: different purposes, different granularity

Let me be clear: I'm not arguing against detailed data in general.

For actuals and historical analysis, more granularity is almost always better:

- Enables deeper drill-down analysis

- Helps identify trends and anomalies early

- Supports advanced analytics and machine learning

But planning is fundamentally different. Planning involves human judgment, organizational accountability, and inherent uncertainty. And in this context, I've learned that intentionally choosing a coarser granularity than your actuals often leads to better outcomes.

Why? Three interconnected reasons.

Why coarser plans are more accurate

1. The law of large numbers: statistical stability through aggregation

The more granular your planning units, the more statistical noise you're trying to predict.

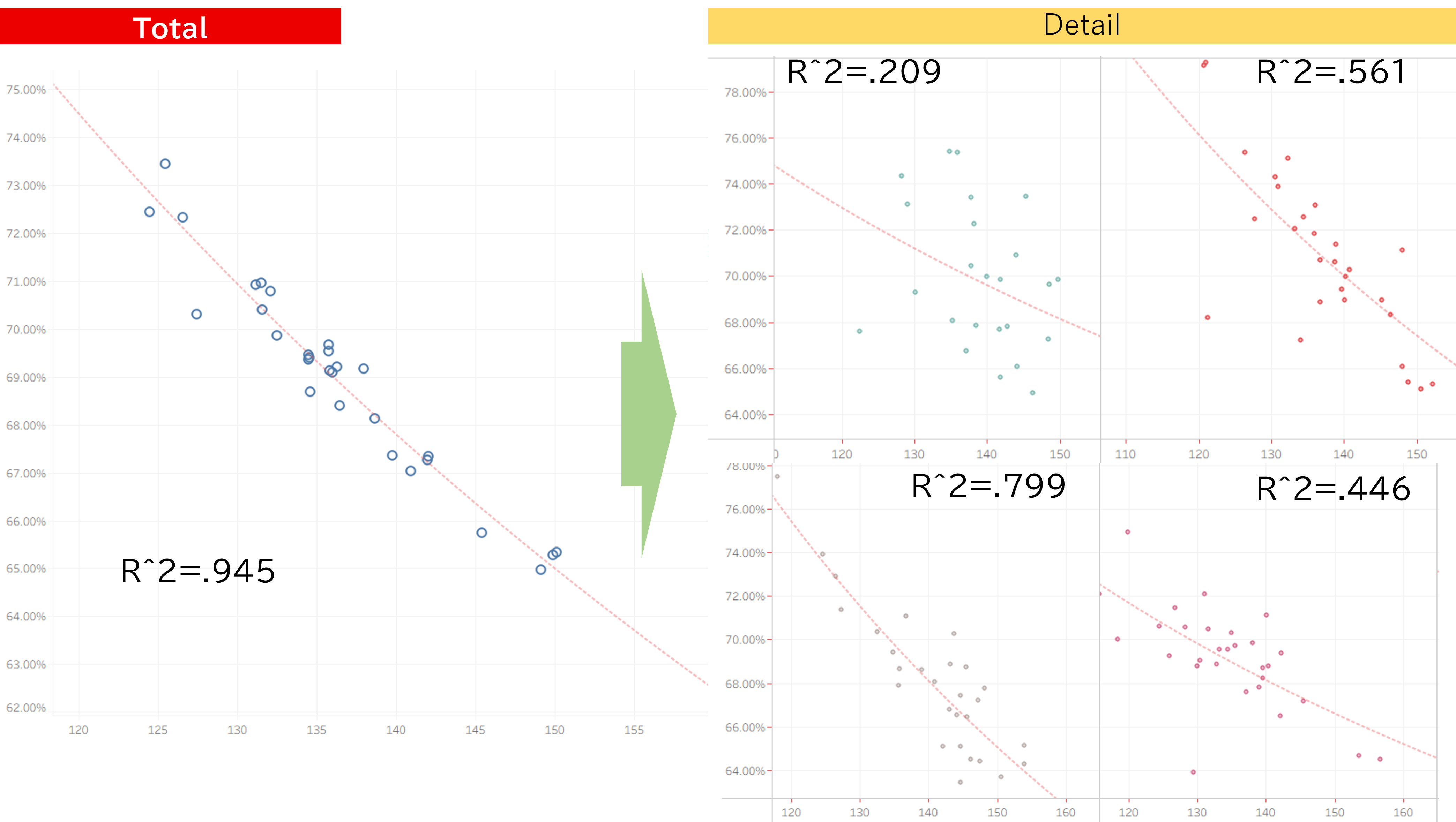

[Figure 1: The Law of Large Numbers in Action]

Figure 1 demonstrates this pattern using real data from our organization: at the Total level, outcomes consistently align with statistical models (R²=0.945). At the Detail level, predictability varies widely — some units maintain reasonable correlation (R²~0.80), but many show weak or unreliable patterns (R²=0.21-0.45).

Individual variations cancel out at higher levels of aggregation. This is precisely why driver-based planning — using ratios, trends, and relationships — works more reliably at coarser levels. The drivers themselves become more stable and predictable when applied to larger populations.
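The cancelling-out effect can be illustrated with a small simulation. This is a minimal sketch using synthetic data, not the data behind Figure 1. The function names, the 50 units, and the mean and standard deviation are all hypothetical, and it assumes the units vary independently of one another. If units move together (for example, in a market-wide downturn), aggregation smooths much less.

```python
import random
import statistics

def coefficient_of_variation(values):
    """Standard deviation divided by the mean: a unit-free measure of noise."""
    return statistics.pstdev(values) / statistics.mean(values)

def simulate(n_units=50, n_periods=2000, mean=100.0, sd=30.0, seed=0):
    """Return (noise of a single unit, noise of the rolled-up total)."""
    rng = random.Random(seed)
    # Each row is one period; each column is one independent planning unit.
    periods = [[rng.gauss(mean, sd) for _ in range(n_units)]
               for _ in range(n_periods)]
    unit_series = [row[0] for row in periods]      # one unit over time
    total_series = [sum(row) for row in periods]   # all units rolled up
    return (coefficient_of_variation(unit_series),
            coefficient_of_variation(total_series))

unit_cv, total_cv = simulate()
print(f"single unit CV: {unit_cv:.3f}")
print(f"total CV:       {total_cv:.3f}")
```

Under these assumptions the relative noise of the total shrinks by roughly the square root of the number of units, so a total built from 50 units is about seven times steadier than any single unit.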

2. Bias accumulation: the "safety margin" effect

When you ask 50 people to forecast individually, each person makes a small, rational adjustment: "Better to be conservative — I don't want to miss my target."

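Those individual safety margins don't stay individual. Random errors tend to cancel out when forecasts roll up, but hedges that all lean in the same direction do not: they accumulate.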

When we consolidated input points — moving from individual contributors to team leads — the chronic conservative bias we'd been fighting largely disappeared. Not because team leads were better forecasters, but because there were fewer points where bias could accumulate.

While I don't have perfect before/after data to quantify this precisely, the pattern has been consistent across the implementations I've seen: more input points means more opportunities for systematic bias to creep in.
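To make the difference between noise and bias concrete, here is a minimal sketch with synthetic numbers. The 5% hedge, the 50 reps, and the function name `roll_up_error` are hypothetical. It only shows that a shared conservative hedge survives the roll-up while random noise averages away. It does not model the effect of consolidating input points, which I observed in practice rather than measured.

```python
import random

def roll_up_error(n_reps=50, true_value=100.0, noise_sd=20.0,
                  hedge=0.05, n_trials=2000, seed=1):
    """Average error of the rolled-up forecast, as a fraction of the true total."""
    rng = random.Random(seed)
    true_total = n_reps * true_value
    errors = []
    for _ in range(n_trials):
        # Each rep submits the true value, shaded down by the hedge, plus noise.
        total = sum(true_value * (1 - hedge) + rng.gauss(0, noise_sd)
                    for _ in range(n_reps))
        errors.append((total - true_total) / true_total)
    return sum(errors) / n_trials

unbiased = roll_up_error(hedge=0.0)   # noise only
hedged = roll_up_error(hedge=0.05)    # noise plus a 5% conservative hedge
print(f"noise only:      {unbiased:+.3%}")
print(f"noise and hedge: {hedged:+.3%}")
```

In this sketch the noise-only error of the total stays close to zero, while the hedged total lands about 5% low no matter how much detail sits underneath it.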

3. Information freshness: faster cycles, more relevant data

Even with Anaplan's powerful capabilities, our initial planning cycles were taking over a month. The bottleneck wasn't the tool; it was the hundreds of granular input points we'd designed into the process.

When we optimized granularity and reduced input points, we dramatically reduced input time: 2 weeks for Budget, 1 week for Forecast.

This isn't just about efficiency — it's about accuracy through timeliness.

All else being equal, a forecast based on week-old information will generally be more accurate than one based on month-old information. Market conditions change. Customer signals evolve. Competitive dynamics shift.

Fewer input points meant faster cycles. Faster cycles meant our plans could reflect current reality, not last month's reality. When market conditions change rapidly, this agility becomes a significant competitive advantage.
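One way to picture the cost of stale inputs is a rough sketch that assumes the underlying signal drifts like a random walk, with independent weekly changes. That is a simplifying assumption, not a claim about any real market, and the function name and the weekly standard deviation are hypothetical.

```python
import random
import statistics

def staleness_error(age_weeks, weekly_sd=2.0, n_trials=5000, seed=2):
    """Std. dev. of the gap between a signal seen `age_weeks` ago and today."""
    rng = random.Random(seed)
    gaps = []
    for _ in range(n_trials):
        # The signal moves by an independent random step each week.
        drift = sum(rng.gauss(0, weekly_sd) for _ in range(age_weeks))
        gaps.append(drift)
    return statistics.pstdev(gaps)

week_old = staleness_error(1)
month_old = staleness_error(4)
print(f"error from week-old input:  {week_old:.2f}")
print(f"error from month-old input: {month_old:.2f}")
```

Under this assumption the typical gap grows with the square root of the input's age, so four-week-old information carries roughly twice the error of one-week-old information before any forecasting has even started.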

The granularity = responsibility principle

So how do you determine the right level of granularity?

My guiding principle: Plan at the level where accountability naturally sits.

If a Business Unit leader is responsible for BU performance, plan at the BU level — not by product, not by customer segment. If a Regional VP owns a region, that region should be your planning unit.

This alignment serves two purposes:

- Statistical: Matches the level where you have meaningful sample sizes and where biases are minimized.

- Organizational: Ensures the person inputting the plan can actually explain and defend the numbers.

Three questions to find the right granularity:

- Does the person have specific knowledge at this level? If a sales manager is guessing at individual deal probability, the granularity is too fine. If they can speak credibly about team pipeline trends, that's the right level.

- Can they explain the assumptions behind the number? If the answer is "I just copied last year and adjusted by 5%," you're asking for too much detail. Good planning requires thoughtful assumptions, which requires appropriate scope.

- Will decisions be made at this level? If no one will ever look at "Product SKU 47829 in the Northeast," why are you planning it separately? Plan at the level where decisions actually happen.

When these three questions align with an organizational accountability level, you've found your optimal granularity.

In Anaplan terms: design your input modules at these accountability levels, not at the maximum granularity your data model can support. Let driver-based logic handle the detailed breakdowns for analysis — but keep human judgment at the level where it's most reliable.
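As an illustration of letting driver logic produce the detail, the sketch below spreads a single BU-level plan number across products using historical mix. It is written in Python for readability and is not Anaplan formula syntax. The function name `allocate_by_mix`, the product names, and the figures are hypothetical, and historical share is only one possible driver.

```python
def allocate_by_mix(plan_total, historical_actuals):
    """Spread one accountable-level plan number across detail items by historical share."""
    base = sum(historical_actuals.values())
    if base <= 0:
        raise ValueError("historical actuals must sum to a positive number")
    return {item: plan_total * value / base
            for item, value in historical_actuals.items()}

# The BU leader enters one number; the product detail is derived, not typed in.
history = {"Product A": 600.0, "Product B": 300.0, "Product C": 100.0}
breakdown = allocate_by_mix(1200.0, history)
for item, amount in breakdown.items():
    print(f"{item}: {amount:.1f}")
```

The human input stays at the level the BU leader can explain and defend, while the derived breakdown remains available for drill-down analysis.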

Conclusion

Anaplan's flexibility allows us to design at any level of granularity, which makes choosing the right level even more critical.

Three interconnected forces — statistical stability, bias mitigation, and information freshness — all favor coarser planning granularity aligned with organizational accountability. I've seen this pattern hold across multiple implementations.

Apply the granularity = responsibility principle to your planning process. The improvements in forecast accuracy and planning agility are real and measurable.

I'd love to hear your experiences. Let's discuss in the comments below.

……………

About the Author:

With 13 years in FP&A and 9 years of hands-on Anaplan experience, Taichi Amaya has been a Master Anaplanner since 2019. He works on the customer side, designing and building enterprise-wide FP&A models and learning daily from the intersection of planning theory and business reality.