Best Of

Inspired by the best: Master Anaplanner insights to close out the year

Hello, Anaplan Community!

What an amazing year it’s been! As we look back on 2025, I am absolutely blown away by the spirit of collaboration and support that defines our community. Every single day, you all show up for each other, sharing knowledge, solving complex challenges, and pushing the boundaries of what’s possible.

This year, you collectively posted over 1,500 questions and provided an incredible 4,000+ answers! That represents thousands of moments of connection, learning, and progress. Thank you for making this community the vibrant, helpful, and indispensable resource that it is.

⭐️ Community Champions of 2025!

I want to give a special shout-out to some of our most active and helpful members this year. Your contributions have not gone unnoticed, and your willingness to jump in and help others is the bedrock of this community. A huge round of applause for a few of our top commenters: @devrathahuja @Dikshant @rob_marshall @seymatas1 @Tiffany.Rice @Prajjwal88 @andrewtye @SriNitya @logikalyan and @alexpavel.

🏆️ Perspectives from Anaplan Certified Master Anaplanners

One of my favorite parts of this community is learning from the best of the best. To celebrate the year, I asked five of our brilliant Certified Master Anaplanners to share their biggest wins from 2025 and what they’re aiming for in 2026.

I hope their stories inspire you as much as they’ve inspired me!

Junqi Xue, Certified Master Anaplanner and Solution Architect at valantic

For Junqi, 2025 was all about building a deeper, more versatile skill set and giving back to the community. Here's what he had to say:

"Here are my learnings from 2025:

Built my foundational knowledge:

a. Completed trainings on ADO, Polaris, Workflow, and tried finding their use cases in our projects and proposals across functions, e.g. finance and supply chain.

b. Attended the workshop for the Anaplan SC APP, getting to understand the capabilities of Anaplan Apps in more detail, and advocated for and shared the knowledge with my internal colleagues.

Enhanced capability: I implemented two complicated use cases of Optimizer (production planning, resource allocation and planning), including the analysis of the results.

Community growth: Some interesting new functions were suggested and added to the valantic Anaplan extension this year, namely the visualization of the line items, list items, or anything else that is used as a filter in saved views, along with the visualization of actions/processes used on the UX. As a result, model clean up is now even easier, especially because it solves the pain point of working blind."

Junqi's target for 2026: "Gather more hands-on experience on the Polaris engine."

Julie Ziemer, Business Solutions Architect at Royalty Pharma, LLC

Julie and her team drove a true transformation in how their organization approaches planning. In her own words:

"This past year has been a truly transitional step forward in our Anaplan journey, driven by myself and my team. What began as an effort to modernize financial modeling has evolved into a full-blown transformation in how we plan, connect data, and collaborate across departments.

We've successfully built and streamlined the foundation of our models, providing a clearer, real-time view of performance and forecasts. This means significantly fewer spreadsheets (win!) and far more confidence in our numbers. Along the way, we rigorously tested, refined our logic, and constantly reminded ourselves that “version control” is not a lifestyle choice, but a life-saving best practice. The progress hasn’t just been about automation; it’s been about building a sustainable framework that enables us to work smarter, not harder.

Looking ahead, our team's focus is on expanding Anaplan’s role in financial reporting, bringing greater automation, transparency, and storytelling to our data. To ensure long-term success, we’re laying the groundwork for a Center of Excellence (CoE) to help our models, processes, and people thrive as a connected Anaplan community."

Julie's goals for 2026:

- "To ensure 100% adoption of Anaplan by all finance team members, completely moving away from legacy systems to establish a single source of truth for planning and reporting.

- To lead the integration of Anaplan beyond the finance function into a new business area, such as research or HR planning, to foster true connected planning across the entire organization."

Wenwei Liu, Anaplan Systems Architect at Atlassian

Wenwei’s journey from consulting to an internal role gave her a powerful new perspective. She shares:

"Through my transition from Anaplan Consulting to the internal Anaplan team, I gained a fundamentally different perspective on how Anaplan supports business connected planning from the customer side. A key accomplishment was contributing to a large-scale model rebuild project that deepened my understanding of the platform's capabilities and identified critical customer needs. This shift from external consultant to internal team member positioned me to bridge consulting expertise with product strategy, uncovering pain points and opportunities that will drive more customer-centric solutions."

Wenwei's goal for next year: "Leverage emerging AI capabilities and new Anaplan features to enhance the connected planning experience for our users, while improving efficiency in model building and internal support processes."

Dmitry Sorokin, Senior Software Engineer at lululemon

Dmitry focused on the power of collaboration and technical excellence to elevate his company's Anaplan ecosystem. He reflects:

"2025 was a year of collaboration and learning. I focused on simplifying complex model logic to reduce calculation times and enhance the user experience across our Anaplan Connected Planning ecosystem. I also partnered with engineering to streamline integration pipelines, improving data refresh speed and overall reliability."

Dmitry's goal for 2026: "I’m excited to continue mentoring new model builders while expanding my architecture expertise. I plan to explore new ways to leverage AI in model building, automated testing, and planning workflows."

Ekaterina Garina, EPM Consultant at Keyrus

Ekaterina spent her year strengthening Connected Planning by bridging the gap between finance and procurement for her clients. She explains:

"This year, I worked closely with clients to strengthen their FP&A capabilities in Anaplan, helping leaders gain clearer insights and make more confident, data-driven decisions. A major highlight was delivering equipment-level variance analysis that improved visibility into cost drivers and simplified performance explanations for stakeholders. Procurement reporting was enhanced through the introduction of clear price and volume impact breakdowns, enabling a deeper understanding of spend movements. These initiatives strengthened Connected Planning by aligning finance and procurement around shared outcomes and consistent insights."

Ekaterina's goal for 2026: "I look forward to continuing to deliver scalable, high-impact solutions that help organizations plan faster and respond more effectively to change."

Now, it's your turn!

What an inspiring collection of achievements! Now, I want to hear from YOU.

What was your biggest win of 2025? What are you looking forward to tackling in 2026? Share your story in the comments below!

Happy planning,

Ginger Anderson

Sr. Manager, Community & Engagement Programs

Re: TEXTLIST() vs [TEXTLIST:]

Ankit,

The function TextList, no matter how it is used, is bad for performance. Why? Because it relies on Text, and Text is evil due to the amount of "real" memory it uses. In the UX, text is approx. 8 bytes, but in reality it is (2 * the number of characters in the text string) + 48 bytes. So the string ABC is really 54 bytes. And when you use TextList, those bytes start adding up very quickly. Take a look at the below, which shows what happens when you use text concatenation on 100 million cells:

And that is with only one concatenation. With TextList you can have multiple concatenations, each adding more characters to the string, which then blows the performance out.
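As a rough illustration of that arithmetic, here is a minimal Python sketch. It relies only on the rule of thumb quoted above, (2 * characters) + 48 bytes per text cell. The function names are made up for the example and are not part of any Anaplan API, and the figures are estimates rather than measurements.

```python
def text_cell_bytes(value: str) -> int:
    """Approximate memory for one text cell: 2 bytes per character plus 48."""
    return 2 * len(value) + 48


def total_bytes(value: str, cell_count: int) -> int:
    """Approximate memory if each of cell_count cells holds the same string."""
    return text_cell_bytes(value) * cell_count


if __name__ == "__main__":
    cells = 100_000_000  # the cell count mentioned in the reply
    # "ABC" on its own, then "ABC" after one concatenation with "DEF".
    for sample in ("ABC", "ABC" + "DEF"):
        gigabytes = total_bytes(sample, cells) / 1e9
        print(f"{sample!r}: {text_cell_bytes(sample)} bytes per cell, "
              f"about {gigabytes:.1f} GB across {cells:,} cells")
```

Each extra character costs 2 more bytes in every populated cell, which is why repeated concatenation over a large cell count grows so quickly.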

Does this help?

Please let me know.

Rob

How I Built It: Codebreaker game in Anaplan

Author: Chris Stauffer is the Director, Platform Adoption Specialists at Anaplan.

My kids and I enjoy code-breaking games, and so I wondered if I could build one in Anaplan. Over the years, there have been many versions of code breaker games: Cows & Bulls, Mastermind, etc.

The object of this two-player game is to solve your opponent’s code in fewer turns than it takes your opponent to solve your code. A code maker sets the code and a code breaker tries to determine the code based on responses from the code maker.

I came up with a basic working version last year during an Anaplan fun build challenge. Here's a short demo:

If you would like to build it yourself, the instructions on how the game works and how to build it are below.

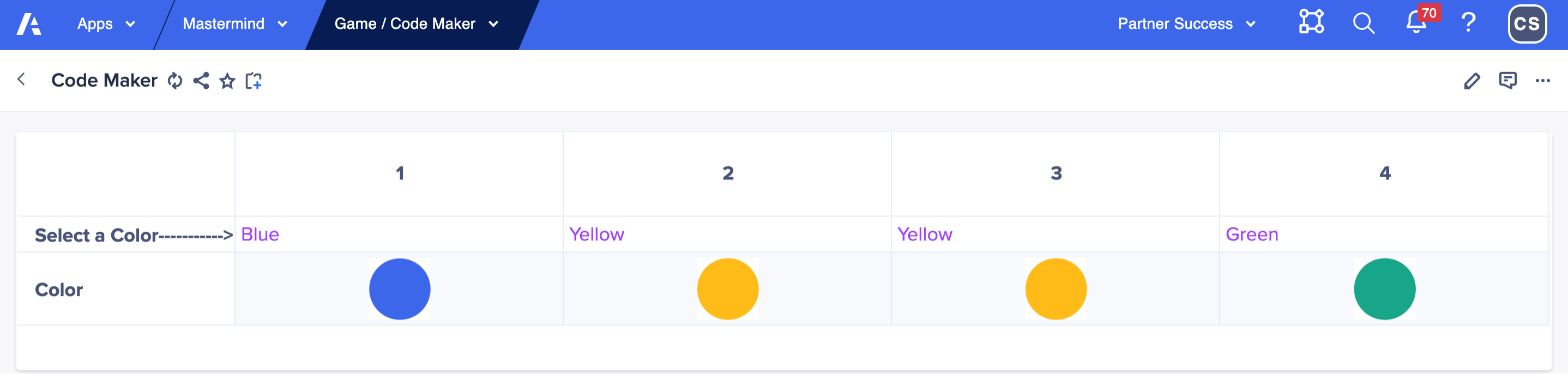

Game start: Code maker input

Player 1, the code maker, starts the game by going to the code maker page and choosing a secret four-color code sequence using a grid drop-down list. Code makers can use any combination of colors, including two or more of the same color. You could set up a model role and restrict access to this page using page settings to ensure the code breaker cannot see it.

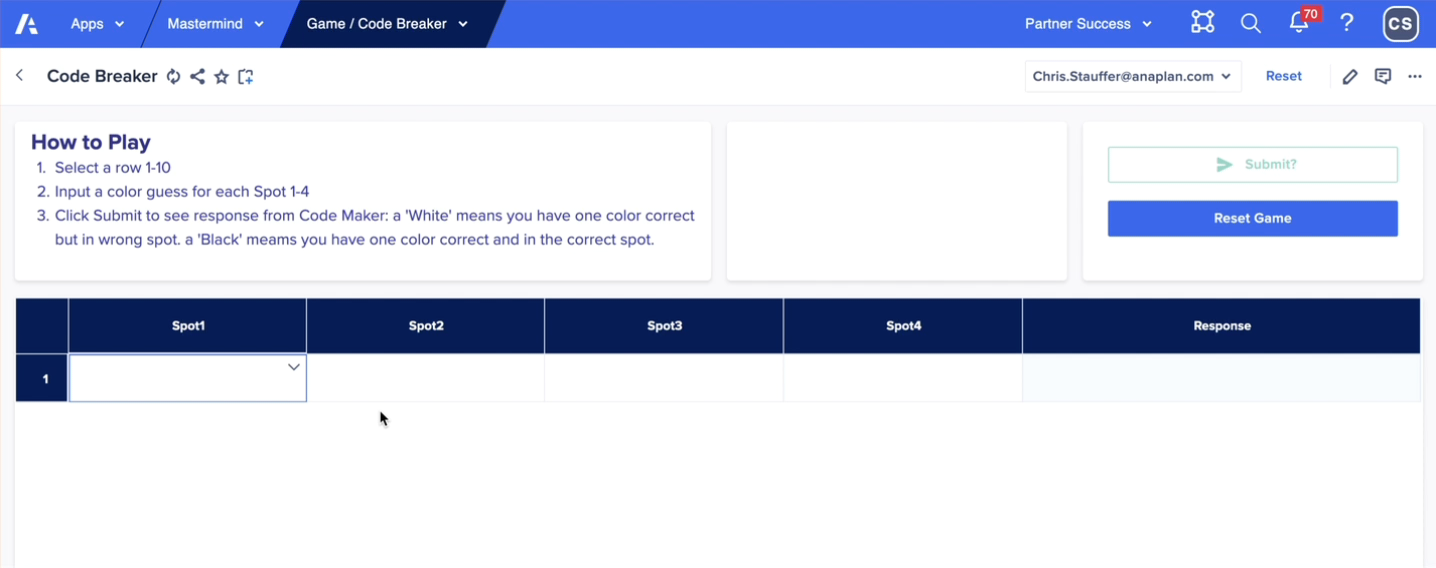

Player 2 code breaker input

The code breaker chooses four colors in the first row attempting to duplicate the exact colors and positions of the secret code.

The code breaker simply clicks the first row in the grid, uses a list drop down to make color guesses and then clicks the Submit? button. A data write action writes a true boolean into the code breaker module to turn on the module logic checking, turns on DCA to lock the row submission, and provides an automatic calculated code maker response. Unlike the real board game, the code maker does not have to think about the response nor drop those tiny black and white pins into the tiny holes in the board — the calc module does the work automatically and correctly every time! I’ve been guilty of not providing the correct response to a code breaker attempt which can upset the game and the code breaker.

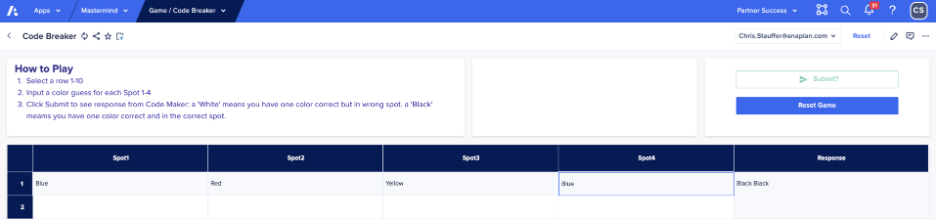

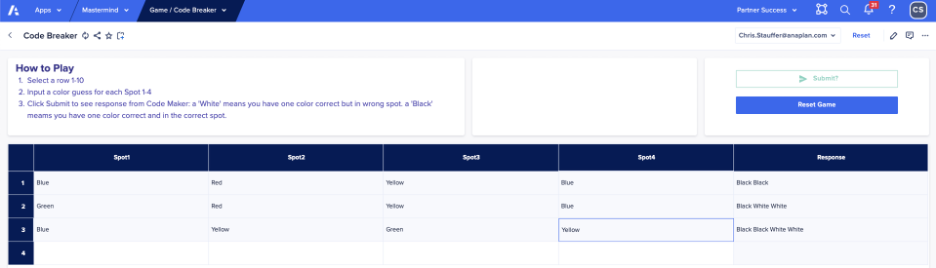

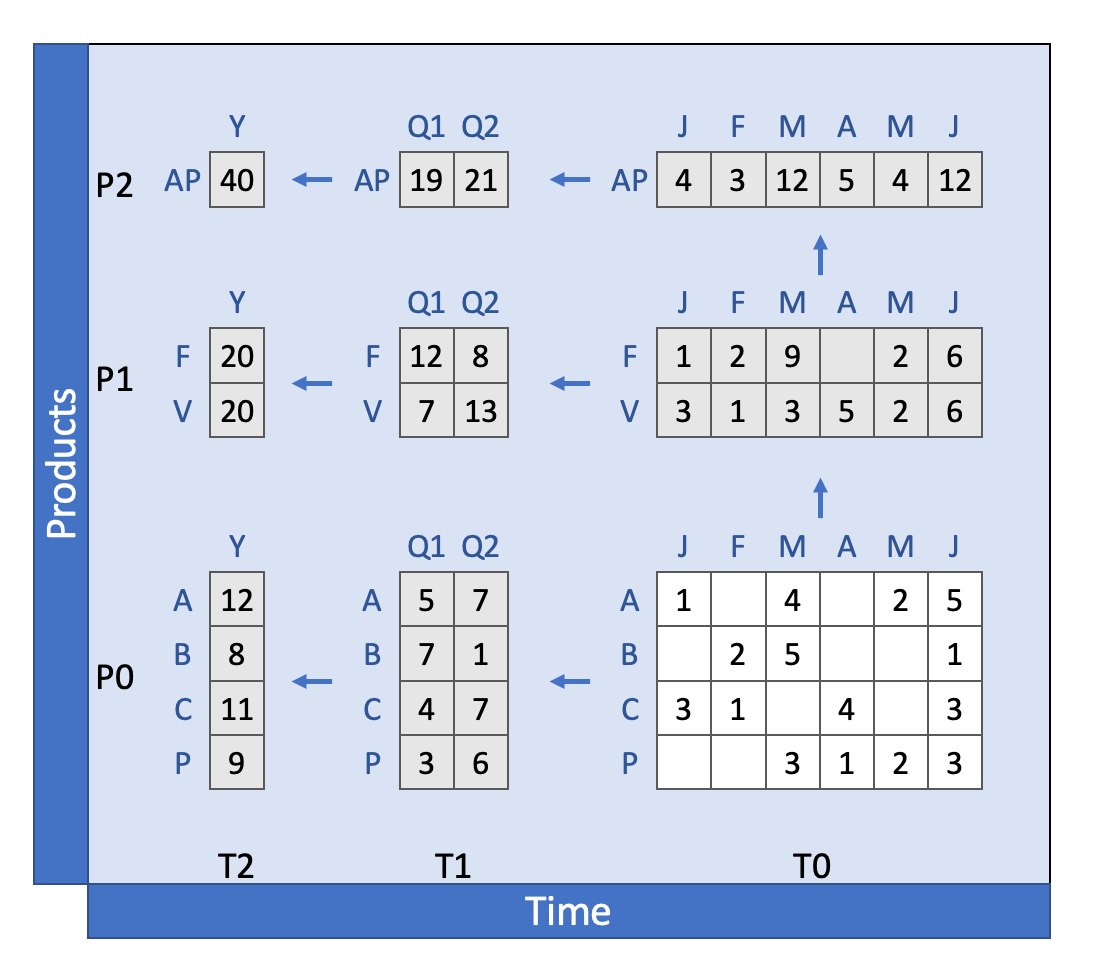

First guess

Below, the code breaker selected blue-red-yellow-blue and clicked the Submit button. Since two guesses are the right colors AND in the right column position, the calculated response is “Black Black”, telling the code breaker that two guesses are in the correct position and are the correct color.

A black color indicates a codebreaker has positioned the correct color in the correct position. A white color indicates a codebreaker has positioned the correct color in an incorrect position. No response indicates a color was not used in the code.

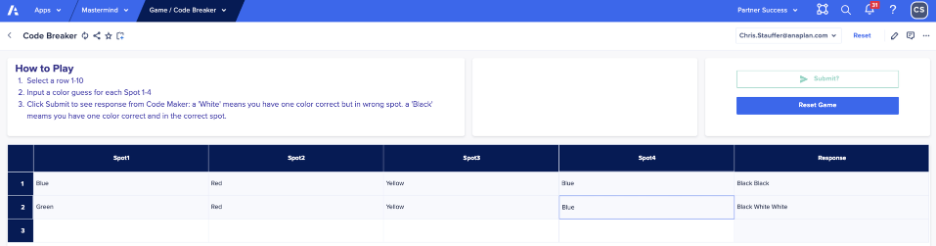

Second guess

In the second row, the code breaker entered green in spot 1 and kept red-yellow-blue. Only one color is now in the correct position (yellow in spot 3), but the code breaker has learned that three colors are correct, two of them in the wrong position, hence the black-white-white response.

Third guess

The code breaker selected blue-yellow-green-yellow and now has all the correct colors with only two out of position.

Fourth guess

You have to be a little lucky to break the code on the fourth guess, but hopefully by now you get the idea of how the game works in Anaplan.

The winner of the game is the player that solves the code in fewer guesses than the other player, so each player takes a turn as code maker and code breaker.

Conclusion

The model uses a lot of conditional formulas, text functions (&, MID, ISBLANK, FIND), and custom matching logic to identify right color, wrong position matches (white) and right color, right position matches (black). A couple of very simple UX pages make it easy to support a two-player game. You’ll have to create the model roles for code breaker and code maker and set up the page security settings.
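For readers who want to see the black and white matching rule outside Anaplan, here is a minimal Python sketch of the scoring. It is not the formula logic from the model (that is in the attached line item export). The function name is invented for the example, and the secret code in the demo is a hypothetical one chosen only because it agrees with the three responses described in the walkthrough.

```python
from collections import Counter


def score_guess(secret, guess):
    """Return (black, white) pin counts for a guess against a secret code."""
    if len(secret) != len(guess):
        raise ValueError("secret and guess must be the same length")
    # Black: right color in the right position.
    black = sum(s == g for s, g in zip(secret, guess))
    # Set exact matches aside, then count the colors left over on each side.
    secret_left = Counter(s for s, g in zip(secret, guess) if s != g)
    guess_left = Counter(g for s, g in zip(secret, guess) if s != g)
    # White: right color, wrong position. The Counter intersection makes sure
    # each remaining secret color is matched at most once.
    white = sum((secret_left & guess_left).values())
    return black, white


if __name__ == "__main__":
    # Hypothetical secret, consistent with the responses in the walkthrough.
    secret = ["blue", "green", "yellow", "yellow"]
    guesses = [
        ["blue", "red", "yellow", "blue"],
        ["green", "red", "yellow", "blue"],
        ["blue", "yellow", "green", "yellow"],
    ]
    for guess in guesses:
        black, white = score_guess(secret, guess)
        print("-".join(guess), "->", "Black " * black + "White " * white)
```

Setting exact matches aside before counting whites is what keeps duplicate colors from being scored twice, which matters because code makers may repeat a color.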

Attached is the line item export in case you are curious or want to build it yourself. There are probably multiple ways to build the logic or make the formulas more elegant, but it was for me a fun diversion.

Enjoy!

Re: Accessible by design: Our new accommodations for certification exams

Hi

I have completed the recertification exam, and yes! Additional resources such as the Planual, Community, and Anapedia are available to use even at a Kryterion test center.

Thanks

Pujitha

PujithaB


On-Demand Calculation in Polaris

Author: Dave Smith is a Senior Product Manager at Anaplan.

We are thrilled to announce the rollout of On-Demand Calculation in Polaris, a feature that significantly enhances performance and efficiency for our users. On-Demand Calculation is designed to compute values only when they are needed, leading to a more optimized use of resources and faster data processing.

Key benefits

- Reduced latency: Only the necessary cells are recalculated when data changes, which means users experience faster updates and more responsive interactions.

- Lower memory usage: By calculating and storing only the required regions, the memory footprint is significantly reduced. This is especially beneficial for highly-dimensioned line items, where the potential aggregate space is vast.

- Improved performance: On-Demand Calculation ensures that data changes are processed more quickly, as unnecessary recalculations are avoided. This is particularly useful for line items with deep hierarchies and detailed time dimensions.

Real-world example

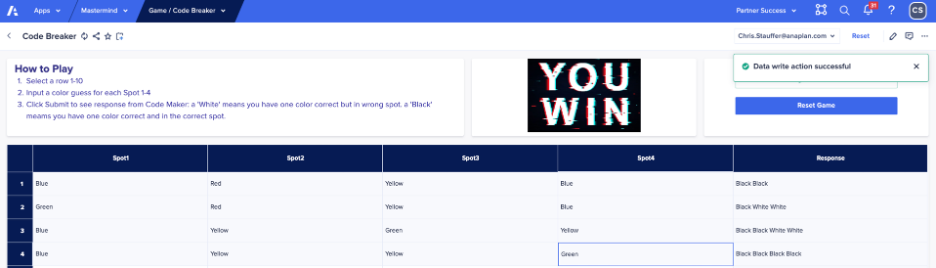

Consider a line item dimensioned by both Products and Time, where the Products dimension has a deep hierarchy (e.g., multiple levels of product categories) and the Time dimension spans several years with detailed monthly data. Without On-Demand Calculation, all aggregates are calculated at model-open time and on recalculation, as below:

Image: A Line Item dimensioned by Products and Time with all values calculated.

However, the reality is that in such scenarios, only a small fraction of the total possible cells are typically viewed by users. On-Demand Calculation ensures that only these necessary regions are calculated, reducing memory usage and speeding up data changes:

Image: The Line Item dimensioned by Products and Time when a user is looking at the ‘top of the house’ value.

How it works

On-Demand Calculation analyses the model on model-open, and marks certain aggregations for deferred calculation. Currently On-Demand Calculation applies to summary methods where the aggregation is the final step and is not referenced in a further calculation, with the exception of Formula and Ratio summary methods which are never deferred. Rather than calculating these regions up-front as was previously the case, these deferred regions will only be calculated as needed. This not only means that the model opens faster, as less calculation is required immediately, but also that writes to the model are made more quickly, as some recalculation is avoided on write.

As calculations are required on-demand, Polaris keeps the calculated regions in cache to improve the performance of future access. They remain in memory until either the upstream cell data changes, making the region invalid, or the model starts running low on memory and the region has not been accessed for a long time. In both cases, the calculated region is dropped and recalculated only if and when it is needed again for a user view – for example if the aggregation needs to be calculated for an export, or it is viewed in the modelling UX or enterprise UX pages.
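The deferral and caching behavior described above can be pictured with a small Python sketch. This is a toy model under stated assumptions, not how Polaris is implemented: the class, its method names, and the product hierarchy are all invented for illustration, it covers only a simple sum over one hierarchy, and it leaves out the memory-pressure eviction mentioned above.

```python
class LazyAggregates:
    """Toy model: totals are computed on first read, cached, and dropped on write."""

    def __init__(self, leaf_values, parent_of):
        self.leaf_values = dict(leaf_values)  # leaf item -> value
        self.parent_of = dict(parent_of)      # item -> parent (top item has no entry)
        self.cache = {}                       # aggregate item -> cached total
        self.calc_count = 0                   # number of aggregates actually computed

    def _ancestors(self, item):
        # Walk up the hierarchy from an item to the top.
        while item in self.parent_of:
            item = self.parent_of[item]
            yield item

    def write(self, leaf, value):
        """Change a leaf and invalidate cached totals above it; nothing is recalculated."""
        self.leaf_values[leaf] = value
        for ancestor in self._ancestors(leaf):
            self.cache.pop(ancestor, None)

    def read(self, item):
        """Return a value, computing an aggregate only when it is requested."""
        if item in self.leaf_values:
            return self.leaf_values[item]
        if item not in self.cache:
            children = [c for c, p in self.parent_of.items() if p == item]
            self.cache[item] = sum(self.read(child) for child in children)
            self.calc_count += 1
        return self.cache[item]


if __name__ == "__main__":
    # Hypothetical hierarchy and values, for illustration only.
    model = LazyAggregates(
        leaf_values={"Shoes": 10, "Hats": 5, "Laptops": 100},
        parent_of={"Shoes": "Apparel", "Hats": "Apparel", "Laptops": "Electronics",
                   "Apparel": "All Products", "Electronics": "All Products"},
    )
    print("computed at open:", model.calc_count)
    print("Apparel:", model.read("Apparel"), "computed so far:", model.calc_count)
    model.write("Laptops", 120)  # a write triggers no recalculation
    print("All Products:", model.read("All Products"), "computed so far:", model.calc_count)
```

In this sketch a write only discards the cached totals above the changed leaf, so its cost does not depend on how many aggregates exist, and a total that nobody views is never computed.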

Impact on users

- End users: Enjoy faster and more responsive data interactions, with reduced latency on data changes.

- Model builders: May notice fluctuations in memory and populated cell counts, reflecting the dynamic nature of On-Demand Calculation. It will also be important to follow best practice by testing the model on realistic, scaled data in UAT. However, the overall user experience will be greatly improved, and model open and formula recalculation times will be reduced.

We believe this update will significantly enhance the user experience and look forward to your feedback. Stay tuned for more updates and enhancements from Polaris!

Questions? Leave a comment!

davesmith


Talent Builder Graduate Mini Resume - Snehitha Adicherla

Name: Snehitha Adicherla

Location: Ohio, United States

Certification: Professional Model Builder, Certified Anaplan Level 2 Model Building

Educational Background: M.S. in Computer Science, Wright State University (2023-2025)

B. Tech in Computer Science, VBIT (2018-2022)

Profile Summary: Analytics professional with experience in SQL, Python, ETL workflows, and Informatica. Achieved Anaplan Talent Builder Level 2 certification, gaining a strong understanding of planning models and Connected Planning. Eager to apply technical and analytical skills in business and planning-focused roles.

Target Roles:

Anaplan Model Builder

Business Analyst

Financial Analyst

Operations Analyst

Supply Chain System Analyst

Technical Support Analyst

Skills:

Anaplan Model Building

SQL

Python

ETL (Informatica)

FP&A

Power BI

Interview Availability: Available upon request

Start Date: Immediately

LinkedIn URL: www.linkedin.com/in/snehitha-adicherla-54ab6616b

Email: snehithaadicherla2001@gmail .com

#anaplantalentbuildergraduate #certifiedmodelbuilder #opentowork

Data integration best practices — Event recording available!

Last month, we hosted a Community Challenge inviting members to share their best practices on data integrations. We provided both beginner and advanced prompts to encourage members to discuss how they move data in and out of Anaplan, share tips for smooth integrations, and inspire others in the Community.

Yesterday, we hosted an event to highlight the responses we received from the Community Challenge. Jon Ferneau (@JonFerneau), Data Integration Principal, shared his expert insights, recognized participants for their contributions, and presented key takeaways for practitioners along with call-to-action items for our Community to consider.

During the event, Jon gave a shout out to our Community Challenge participants, including @andrewtye @matthewreed @SunnyNafraz @Prajjwal88!

Watch the recording

Timestamps:

- Welcome — 0:00

- Guest host introductions and overview — 1:40

- Community Challenge recap presentation — 3:47

- Q&A — 23:35

- Closing — 42:54

A PDF of the presentation is available here:

Resources for Data Integration Best Practices

- OEG Best Practice: Data Integration Decision App

- OEG Best Practice: Data Hubs: Purpose and Peak Performance

- Getting Started with Anaplan APIs: What you need to know

- [Start Here] Anaplan APIs in a Nutshell

- Understanding Errors and Rejected Lines When Importing Data Via APIs

Questions? Leave a comment!

becky.leung


October 2025 Spotlight: Community Boss Employees

This month, we’re highlighting the newest Community Boss Employees (CBEs) who joined the program in 2025! The Community Boss Employees program brings together top internal Anaplan experts who actively share their knowledge, support members, and elevate conversations across the Community.

Our CBEs bring fresh perspectives and deep expertise to the Community. They actively answer questions in forums, create valuable content, help shape Community Challenges, and present at events on specialized topics, strengthening collaboration and advancing collective knowledge across the Anaplan ecosystem.

To help the Community get to know our new CBEs better, we asked each of them to choose one Community article they believe every member should read and share why it’s particularly impactful. Here is what they had to say!

Allison Slaught (@allison_slaught), Anaplan Intelligence Director – OEG

Why everyone should know this article: Combined grids is a transformative feature that improves the Anaplan user experience. Whether you’re building a new app or maintaining an existing one, this should be on your radar. As the name implies, it allows you to consolidate views from multiple modules into a single, unified grid.

Why I find it valuable: This feature delivers a powerful one-two punch: a more intuitive front-end and simplified back-end. I was able to experiment with it in early access, and the maintenance benefits alone were significant: fewer user filters to build and manage; less need for synchronized scrolling; redundant pages were consolidated or eliminated; and simplified training for new users due to intuitive design. It’s a worthwhile investment to incorporate into your roadmap to enhance existing applications and will quickly pay dividends in both user satisfaction and development time.

Elizabeth Schera (@ElizabethS), Director of Product Utilization & Adoption

End user triggering of workflow processes

Why everyone should know this article: I chose this article because it shows how you can connect Anaplan's modeling capabilities with the real-world business processes (workflow) they support. Even more than the math, workflows ARE the planning process. And, nearly every organization has processes — like launching new initiatives or approving headcount requests — that start with the planner and require structured approvals before being committed. This article demonstrates how to empower your end-users to initiate these actions directly within Anaplan, streamlining operations and ensuring governance is built right into the process.

Why it's valuable for you to read: Getting started on building your first Workflow can feel intimidating, but this guide provides a detailed, practical example covering both the model build and the workflow configuration from start to finish. This gives Workspace Administrators a concrete blueprint they can learn from and adapt to their own unique use cases. It’s the perfect starting point for anyone looking to demystify workflow and unlock its powerful capabilities to automate and control key business activities.

Etienne Waniart (@EtienneW), Principal Platform Adoption Specialist

Streamline hierarchy management with Polaris's new ITEMLEVEL and HIERARCHYLEVEL functions

Why everyone should know this article: Do you work with complex or irregular hierarchies in Anaplan? Then this article is a must-read! It introduces the new ITEMLEVEL and HIERARCHYLEVEL functions in the Polaris engine, which greatly simplify hierarchy logic and are especially helpful when working with ragged hierarchies, with no need for complicated workarounds.

Why it’s valuable for you to read: These functions make models cleaner, easier to maintain, and significantly more performant. If you design or manage hierarchical structures in Anaplan, this will save you time and help you streamline your logic while making your models more sustainable in the long term. No more need for workarounds using ratio summary methods!

Jan Sypkens (@jan_sypkens), Principal Platform Adoption Specialist

Jan recommended multiple Polaris articles.

Why everyone should read these articles and why it's valuable for you to check out: I recently rebuilt an airport capacity model in Polaris — a Classic model I originally developed in 2017. This was a personal challenge I set for myself to deepen my understanding of Polaris and ADO. In the process, I also discovered the added benefit of leveraging AI to generate artificial datasets that enhance the modeling construct.

Throughout the rebuild, several Anaplan Community articles proved particularly valuable to me, including:

- Anaplan Polaris – Understanding Blueprint Insights and Optimizing for Populated Space

- Anaplan Polaris – Blueprint Insights

- Anaplan Polaris – Populated Space

- Anaplan Polaris – Natural Dimensionality

- Unlocking the power of Polaris: A guide to efficient model building

- Iterative Development in Polaris (ALM)

- Moving a model from Classic to Polaris

Jon Ferneau (@JonFerneau), Data Integration Principal

OEG Best Practice: Imports and exports and their effects on model performance

Why everyone should know this article: Data integration is an integral part of all Anaplan modeling. Ensuring that you have the proper integrations set up can greatly improve your planning experience and build trust in the underlying data that drives your decision making. This best practice article highlights the most important concepts to consider when designing your integration strategy and is a must-read for all Anaplan model builders and IT teams alike.

Why it's valuable for you to read: If you focus on implementing these basic structures and ideas, you will have efficient processes that limit model downtime for end users. Following data integration best practices also makes it much easier for your organization to manage and troubleshoot potential data issues, and quicker to build net new integration pipelines into your planning models to support use case growth.

Julien Froment (@julien_froment), Principal Platform Adoption Specialist

Guiding users through the UX best practices — Event recording available!

Why everyone should watch this recording: In the past, we’ve already shared best practices around UX. However, this event recording takes it further — it showcases excellent examples that can truly inspire UX design. To me, UX is not just about technical functionalities — it’s where process, storytelling, and features come together.

This video captures that perfectly, offering strong examples we can learn from and build upon. As Page Builders or end users, it’s worth taking the time to explore this video and see how you can leverage the full potential of the UX.

Why it's valuable for you to check out: It’s valuable because it brings UX to life — showing how thoughtful design can transform user experience. It’s a great source of inspiration for anyone looking to make their pages more intuitive and impactful. Thanks, @ElizabethS and @seb_mcmillan — this event recap is brilliant.

Seb McMillan (@seb_mcmillan), Principal Platform Adoption Specialist

Anaplan Polaris: A deeper dive into the Polaris Engine and model building techniques

Why everyone should know this article: No one should even think about building in Polaris without reading this first — the information here will help you save hours of work.

Why it’s valuable for you to read: Polaris has a number of key differences in implementation approach from Classic, and you shouldn't just jump in and hope for the best. Best practice is evolving, but this reflects our real-world experience in working with the hundreds of models our customers have built.

Theresa Reid (@TheresaR), Architecture & Performance Director

Iterative Development in Polaris

Why everyone should read this article and why it's valuable for you to check out: If I had to choose one, I would shamelessly plug my own article, Iterative Development in Polaris. I think this article is important for all Polaris model builders, as it outlines the different approach that should be used when building in Polaris and why. This approach doesn’t feel “natural” for Classic model builders, but following it allows for better learning and quick, efficient model building, and the article gives guidance on when and how to do optimization.


We hope you enjoyed getting to know our CBEs and checking out their recommended articles and recordings in this month’s Community Member Spotlight! Share your thoughts in the comments below, and feel free to share your favorite Community articles!

Want to learn more about our Community Member Spotlight series? Check out this post.

Do you know someone in the Community who you think should be featured in a Community Member Spotlight? We want to hear about it — please nominate them here.

becky.leung


Share your data integration best practices — September 2025 Community Challenge

We’re excited to launch our September 2025 Community Challenge, focusing on one of the most critical aspects of Anaplan success: data integrations. Whether you’re just getting started with CloudWorks or Anaplan Connect, or you have built advanced orchestrations using APIs and Anaplan Data Orchestrator, integrations are at the heart of connecting Anaplan to the rest of your ecosystem.

This month’s Challenge is your chance to showcase how you move data in and out of Anaplan, share tips that have made your integrations run smoothly, and inspire others in the Community.

Challenge prompts

To help spark your ideas, here are some questions you might explore in your response.

Note: Please avoid using AI/ChatGPT when writing your Challenge response — it should come from your own expertise so the Community can learn from you!

Please select one to two prompts from the Beginner or Advanced categories below to answer!

Beginner focus

- How do you set up a simple import or export between Anaplan and a source system?

- What are your go-to tips for mapping flat files or leveraging saved views?

- Do you prefer CloudWorks or Anaplan Connect for your first integrations — and why?

Stretch goals (advanced concepts)

- How do you orchestrate multiple integrations together using Anaplan Data Orchestrator or scheduling tools?

- Have you used APIs to automate your flows? If so, what benefits did you gain?

- What error handling, monitoring, or logging practices keep your integrations reliable?

- Any advice for working with large files or optimizing performance?

Learnings

- In addition to sharing your tips, tell us about mistakes you’ve made and what you have learned from them — your experiences with data integrations can help fellow Community members succeed.

Bonus

- Share a diagram, workflow screenshot, or snippet of your integration script to illustrate your process.

How to participate

- The data integrations Best Practices Challenge kicks off today, September 9, and concludes on September 26.

- Post your response directly in this thread — whether it’s a detailed write-up, a quick list of tips, screenshots, or even a short video walkthrough. Pick one or two prompts from the beginner or advanced categories and let us know your responses! No matter your style, your perspective will help the Community grow.

- Check out tips shared by your fellow Community members.

What's in it for you?

- Recognition: Gain recognition as a data integration pro in the Community.

- Learn from your peers’ creative approaches and best practices.

- Earn a badge: As a thank you for your participation, everyone who shares their best practices will receive an exclusive Community Challenge badge. It’s a fun way to show off your contribution!

- Earn a shout-out in our upcoming event: on October 28, we’ll be discussing data integration best practices at an ACE Spotlight event, where participants' responses will be highlighted.

Ready to get started?

Share your data integration best practices below — whether you’re a beginner experimenting with your first CloudWorks schedule or an advanced builder orchestrating complex API workflows, your learnings and ideas will spark inspiration across the Community!