Before making business decisions based on PlanIQ forecast results, you should assess how well the forecast is likely to perform in the real world. It’s important to evaluate forecast performance at the outset of your project as well as on an ongoing basis. Also consider that a range of factors unique to your business context affect which methods and metrics are best suited to your use case. We recommend evaluating several methods and metrics to gain a robust understanding of the forecast performance.

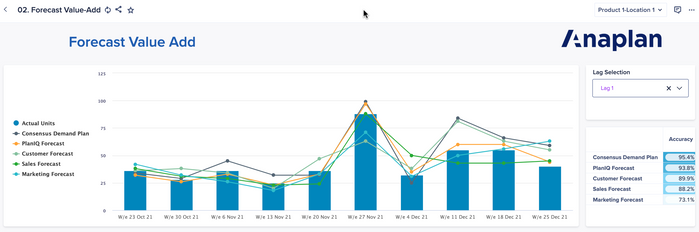

Example forecast accuracy dashboard

When should you assess forecast accuracy?

Throughout the process of building an operational PlanIQ forecast, there are several occasions when you will likely need to assess forecast accuracy:

- Comparing alternative models and selecting the best one

- Estimating the expected performance of the future forecast

  - It is important to assess the risk of over- or underperformance, and to be able to plan for variance from the point forecast.

  - Establishing accuracy metrics also helps you identify when the forecast deviates from expected performance.

- Monitoring forecast performance over time and determining when to retrain with new data as the old model becomes outdated

- Identifying areas of potentially poor performance (e.g., times of year, regions, product groups) before deployment, and taking corrective measures

  - Performance may improve with the addition of related time series or metadata.

  - You may detect extreme and unpredictable values that bias the model; you can remove them by using the “exclude_value” option (for more on the exclude value function, see Anapedia).
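To make the first two occasions more concrete, the following Python sketch scores several candidate forecasts against the same holdout period of actuals and selects the one with the lowest mean absolute error (MAE). It is a generic calculation run outside PlanIQ on exported values, not PlanIQ's own implementation. The model names and numbers are hypothetical, and MAE, MAPE, and bias are only three common metrics among many.

```python
# Minimal sketch: compare hypothetical candidate forecasts on a holdout period.
# Not PlanIQ's implementation; names and values are illustrative only.

def mae(actuals, forecast):
    """Mean absolute error: average size of a miss, in the data's own units."""
    return sum(abs(a - f) for a, f in zip(actuals, forecast)) / len(actuals)

def mape(actuals, forecast):
    """Mean absolute percentage error; raises ZeroDivisionError if any actual is 0."""
    return 100 * sum(abs(a - f) / abs(a) for a, f in zip(actuals, forecast)) / len(actuals)

def bias(actuals, forecast):
    """Mean signed error: positive means over-forecasting on average."""
    return sum(f - a for a, f in zip(actuals, forecast)) / len(actuals)

def compare_models(actuals, candidates):
    """Return per-model metrics and the name of the model with the lowest MAE."""
    scores = {
        name: {"mae": mae(actuals, fc), "mape": mape(actuals, fc), "bias": bias(actuals, fc)}
        for name, fc in candidates.items()
    }
    best = min(scores, key=lambda name: scores[name]["mae"])
    return scores, best

# Hypothetical holdout actuals and two hypothetical candidate forecasts
holdout_actuals = [120, 135, 150, 160]
candidates = {
    "model_a": [118, 140, 145, 170],
    "model_b": [125, 130, 155, 158],
}

scores, best = compare_models(holdout_actuals, candidates)
for name, metrics in scores.items():
    print(name, {k: round(v, 2) for k, v in metrics.items()})
print("lowest MAE:", best)
```

Using MAE as the selection criterion is itself an assumption; depending on your business context, another metric or a combination of metrics may be a better basis for choosing a model.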

Which factors impact forecast accuracy?

For business users, one of the main challenges in evaluating the performance and accuracy of time series forecasts is that what counts as good performance depends heavily on the unique business context and use case.

There are many factors to consider as you establish a forecast evaluation process, including:

- Seasonality and other cyclical influences

- Length of training period

- Forecast horizon duration

- Business cost of over- vs under- forecasting

- Sensitivity to user inputs and assumptions

- Periodicity of the dataset (monthly, weekly, daily, hourly, etc.)

- Industry and particular line of business

- Single-item forecast variable (SKU, Group, etc.) vs. multiple items

- Structure of the item hierarchy, and the level of the hierarchy at which business decisions are made

- Other factors specific to your industry or use case, such as business evaluation criteria that depend on forecast results. Different stakeholders or business groups may rely on the same forecast results for different purposes, and each group may care about different metrics or time frames (short- vs. long-term performance).
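The business cost of over- vs under-forecasting can be made explicit with a cost-weighted error. In the minimal Python sketch below, the per-unit costs `over_cost` and `under_cost` are hypothetical placeholders chosen for illustration, not PlanIQ settings. It shows how two forecasts with the same MAE can carry very different business costs when under-forecasting is more expensive.

```python
# Minimal sketch of an asymmetric, cost-weighted forecast error.
# over_cost and under_cost are hypothetical per-unit costs; use your own figures.

def forecast_cost(actuals, forecast, over_cost, under_cost):
    """Total cost of forecast misses, charging over- and under-forecast units differently."""
    total = 0.0
    for a, f in zip(actuals, forecast):
        if f > a:
            total += (f - a) * over_cost   # excess units, e.g. holding or waste cost
        else:
            total += (a - f) * under_cost  # missing units, e.g. lost sales
    return total

actuals = [100, 100, 100]
forecast_high = [110, 110, 110]  # over-forecasts by 10 units each period
forecast_low = [90, 90, 90]      # under-forecasts by 10 units each period

# Both forecasts have an MAE of 10, but if a missed sale costs 4x as much
# as an unsold unit, the under-forecast is far more expensive.
cost_high = forecast_cost(actuals, forecast_high, over_cost=1.0, under_cost=4.0)
cost_low = forecast_cost(actuals, forecast_low, over_cost=1.0, under_cost=4.0)
print(cost_high, cost_low)
```

A linear per-unit cost is the simplest possible assumption; if your costs are nonlinear or vary by period, the cost function would need to reflect that.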

We recommend that you document the factors above for your use case and align with business stakeholders on which metrics matter most for decision making.

Evaluating multiple approaches

Some approaches to measuring forecast accuracy will suit your use case better than others, and many use cases require a combination of methods and metrics to evaluate performance reliably.

It is important to consider multiple approaches to calculating forecast accuracy when implementing a new forecasting project with PlanIQ. Once you have identified appropriate metrics, monitor performance on an ongoing basis. We also recommend benchmarking the PlanIQ forecast against any existing forecasts, as shown below.

Example comparison of actuals vs PlanIQ and other forecasts
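One simple way to benchmark is to compute the ratio of the PlanIQ forecast's MAE to the existing forecast's MAE over the same periods. The Python sketch below assumes you have exported the actuals and both forecasts as equal-length lists; all values are hypothetical. A ratio below 1.0 means the PlanIQ forecast had smaller average errors than the existing forecast over that period.

```python
# Minimal sketch: benchmark a forecast against an existing forecast with relative MAE.
# All values are hypothetical and assumed to cover the same periods.

def mae(actuals, forecast):
    """Mean absolute error over paired periods."""
    return sum(abs(a - f) for a, f in zip(actuals, forecast)) / len(actuals)

def relative_mae(actuals, candidate, benchmark):
    """Candidate MAE divided by benchmark MAE; below 1.0 means the candidate is more accurate."""
    benchmark_mae = mae(actuals, benchmark)
    if benchmark_mae == 0:
        raise ValueError("benchmark forecast has zero error; ratio is undefined")
    return mae(actuals, candidate) / benchmark_mae

actuals = [200, 210, 190, 220]
planiq_forecast = [205, 208, 195, 215]    # hypothetical exported PlanIQ values
existing_forecast = [190, 220, 180, 235]  # hypothetical existing forecast

ratio = relative_mae(actuals, planiq_forecast, existing_forecast)
improvement_pct = (1 - ratio) * 100
print(f"relative MAE: {ratio:.2f}, error reduction: {improvement_pct:.1f}%")
```

Repeating the comparison per segment (for example, by region or product group) and per time window can show where each forecast is stronger, which a single overall ratio hides.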