Building well-performing models means balancing the complexity and functionality of a model against the number of concurrent users.

If you have seen the software development / project management triangle, where scope, time and cost control quality, this will be familiar. It is a good way to show the constraints of any fixed system or process. In this case, an Anaplan model is our fixed system, and the constraints affect the users' experience of your model.

USERS

The more users a model has, the greater the need for it to perform quickly. This is because Anaplan protects data integrity by blocking other data writes (changes) while one is in progress. Other model changes are queued behind the currently executing change, and users perceive this queueing as a delay and poor performance.

Concurrency Examples:

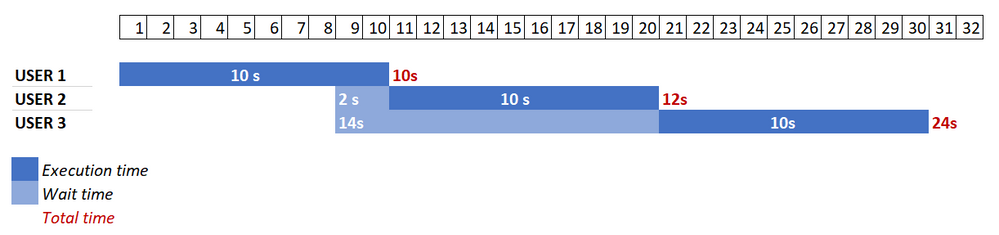

Example 1: If a calculation took 10s to complete and users were trying to make that change every 8s we would see this effect:

The second user has to wait 2s for the previous change to complete, so their change takes 12s (2s wait plus the 10s calculation). Each later user waits 2s longer than the one before, so the 6th user in this example takes twice as long to make the change (20s), waiting a full 10s behind another delayed change.
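The queueing in Example 1 can be reproduced with a minimal sketch. It assumes a single queue in which writes run one at a time in arrival order, a fixed 10s calculation, and a new change every 8s. The function name `simulate_write_queue` is hypothetical; this illustrates the arithmetic only and is not a description of how Anaplan is implemented.

```python
def simulate_write_queue(arrival_times, calc_seconds):
    """Return a (wait, total) pair in seconds for each change.

    Assumes writes are processed strictly one at a time, in arrival order.
    """
    results = []
    free_at = 0  # time at which the change currently in progress finishes
    for arrival in sorted(arrival_times):
        start = max(arrival, free_at)      # queue behind any running change
        finish = start + calc_seconds
        results.append((start - arrival, finish - arrival))
        free_at = finish
    return results


# Example 1: a 10s calculation, with a new change submitted every 8s.
example_1 = simulate_write_queue([0, 8, 16, 24, 32, 40], calc_seconds=10)
for user, (wait, total) in enumerate(example_1, start=1):
    print(f"User {user}: waited {wait}s, total {total}s")
```

Under these assumptions User 2 waits 2s for a total of 12s, and User 6 waits 10s for a total of 20s.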

Example 2: The issue is worse when multiple users act at once (concurrency). Here, 2 users try to make the change at the same time (at the 8s mark):

User 2 waits 2s as before, but User 3 must also wait for User 2's change to complete: 2s for the first change to finish plus 10s for User 2's calculation, a wait time of 12s, giving them a total time of 22s.

These are extreme examples; most Anaplan calculations take under 2s. Even so, relatively quick calculations can queue under very high user loads. It is vital to optimize the model before you submit it for concurrency testing, so that the blocking effect of writes is kept to a minimum. Depending on the results of the concurrency testing, you may need to adjust the volume of users in the test to hit the desired target response time (performance), or adjust the target response times (user expectations).

If it is not possible to reduce the number of users, then it is necessary to reduce the complexity of the model.

If we keep the same complexity or functionality, then as we add more users the performance decreases.

COMPLEXITY

When it comes to calculations in Anaplan, we encounter two main types of complexity. The first is a formula in an individual line item that is itself very complex. Performance can be poor if it contains too many terms, multiple combinations of functions, inefficient functions, lengthy conditional statements, or unnecessary duplicates and repetitions. This is made worse when more dimensions are applied than are required, when the level applied in a composite hierarchy is too granular, or simply when summaries are applied needlessly. In each of these cases the calculation runs over more cells than it needs to.
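To see why unnecessary dimensions are costly, a rough sketch can estimate the cells a line item calculates over as the product of the sizes of its dimensions. The list names and sizes below are made up for illustration, and the estimate ignores summary items and subsets.

```python
from math import prod


def cell_count(dimension_sizes):
    """Estimate cells as the product of the item counts of each dimension."""
    return prod(dimension_sizes.values())


# Hypothetical list sizes, for illustration only.
over_dimensioned = {"Products": 5000, "Regions": 50, "Months": 12, "Versions": 3}
as_required = {"Products": 5000, "Months": 12, "Versions": 3}  # Regions not needed

print(f"{cell_count(over_dimensioned):,} cells")  # 9,000,000 cells
print(f"{cell_count(as_required):,} cells")       # 180,000 cells
```

In this made-up case, dropping the one dimension the formula does not need cuts the calculated cells by a factor of 50.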

The second type of complexity arises with convoluted calculation chains. A calculation consisting of thousands of line items in a long reference chain can be slow, even if all the calculations within that chain are relatively small, especially if many of those are summary calculations.

Complexity can also come in the form of user-initiated actions and processes (see Planual rule 5.01-03, "Keep User actions (in general) to a minimum"). A complex process of 10 or more actions will take a significant time to complete, even if all the calculations it triggers are of short duration. If you have to run user actions, batch and schedule them outside of working hours where possible, to avoid impacting other user activity.

To reduce complexity we must optimize the model. This can mean optimizing the calculations, reducing the number of calculations, or reducing the scope of the user journey. Optimizing calculations is the place to begin, as the intention is to keep the existing functionality while simplifying or reducing the calculations being done.

If optimizing calculations doesn't yield enough performance gains to balance against the number of users, then reducing the volume of calculations may be needed.

One way to do this is to decouple downstream modules from the calculations. An example is breaking the references between calculation modules and output modules and replacing them with an import process, either run by an admin or scheduled, depending on how frequently the reporting data needs to be seen.

A better solution may be to split the models by business process: understand what needs to be done for a single business process, and set up another model for a different process (think HR vs FP&A). If you need to retain the functionality/complexity, a possible solution is to split the data across models, or to duplicate the model and split the users between the copies. Some dimensions, such as Accounts or Regions, could be split over different models. Splitting the model does have consequences: you now need actions to bring data into a consolidated model, and that model will not update in real time. ALM does make splitting models easier, in that a number of similar spoke models can be updated from a single dev model.

Splitting models to reduce calculation volumes is an extreme scenario; it is much more likely that current functionality can be optimized using best practice and Planual principles. Can the scope of calculations be reduced by reducing the number of versions or scenarios, or by introducing Time Ranges for specific modules? Do the calculations need past years? Is the granularity of the data appropriate, or are you calculating in Days or Weeks when Months would suffice?
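As a rough illustration of these questions, the sketch below counts how many time-by-version slices each non-time cell is calculated for. The period counts are approximations (365 days, 52 weeks and 12 months per year), and the year and version counts are invented for the example.

```python
# Approximate periods per year; ignores leap years and calendar types.
PERIODS_PER_YEAR = {"Days": 365, "Weeks": 52, "Months": 12}


def time_version_slices(years, granularity, versions):
    """Number of (period, version) combinations calculated per non-time cell."""
    return years * PERIODS_PER_YEAR[granularity] * versions


# Hypothetical scopes, for illustration only.
baseline = time_version_slices(years=5, granularity="Days", versions=4)
reduced = time_version_slices(years=2, granularity="Months", versions=2)

print(baseline)  # 7300
print(reduced)   # 48
```

The absolute figures matter less than the multiplier: every extra year, version or finer time period scales the work done for every other dimension in the module.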

Ways to reduce complexity:

- Optimizing formulas

  - Simplifying calculations

  - Reducing repetition

  - Reducing dimensionality

  - Removing summaries

- Optimizing the User Journey / Business Process

- Spreading / Scheduling User access

- Splitting functionality across multiple models

- Splitting Data or Users across multiple models

If we keep the same number of users and decrease the complexity (optimize), we will see an improvement in performance.

PERFORMANCE

The desired performance is therefore a balance of how many users we have against how complex their user journey is through the model. It is vital that we evaluate the baseline performance of the model whilst developing so that we can be confident in the scalability of the model to handle the desired number of users. You can test models with our Model Concurrency Testing services, as recommended in the Anaplan Way. Before testing you may need to optimize to reduce complexity so that you know the baseline durations are well below the target times set out in the test specification. Essentially, you have to have performance in reserve so that as you increase the number of users you still meet the performance targets.

Optimization is how we reduce complexity, but we should be building with performance and scalability in mind from the start; this is where the PLANS methodology ("This is how we model") guides you. Following Planual best practice and the other resources here on Community will help keep complexity to a minimum, so that you can achieve the performance levels your users demand.

Performance is a balance of complexity and user concurrency, and reducing either of these can improve it.

Author Mark Warren.