Author: Dmitri Tverdomed is a Data Architect and Director at Zooss Consulting.

Data integration isn’t just a technical exercise anymore; it’s foundational to a business’s ability to move quickly, make informed decisions, and scale sustainably. Whether you’re integrating Anaplan with upstream data platforms or pushing model outputs downstream to ERP systems, doing it well can be the difference between delivering insight and delivering confusion.

I regularly work with organizations navigating all levels of integration maturity, and across the board, the same questions arise: How do we get started? What tools and patterns are best for us? What are the pitfalls to avoid?

This article answers those questions, walks through the key integration methods, and shares best practices that help you get the most out of Anaplan and your broader data stack.

First, ask the right questions

Before touching a line of code or configuring a tool, ask the following:

- Who’s owning the integrations: business or IT? It’s likely to be a hybrid model in which the Anaplan CoE on the business side and its technology counterparts both play a role.

- What are the main data sources (on-prem, SaaS, cloud) and data domains (master data, transactions, config, reference)?

- Is a data platform like a data warehouse or lakehouse already in place? How much of the required data can be sourced from it?

- What level of automation is needed? Is real-time sync required upstream or downstream from Anaplan?

- How much historical data needs to be loaded, and at what granularity?

- Are there existing ETL/ELT tools in the organization?

Getting alignment on these up front helps shape the integration design and operating model from the outset — and saves a lot of rework later.

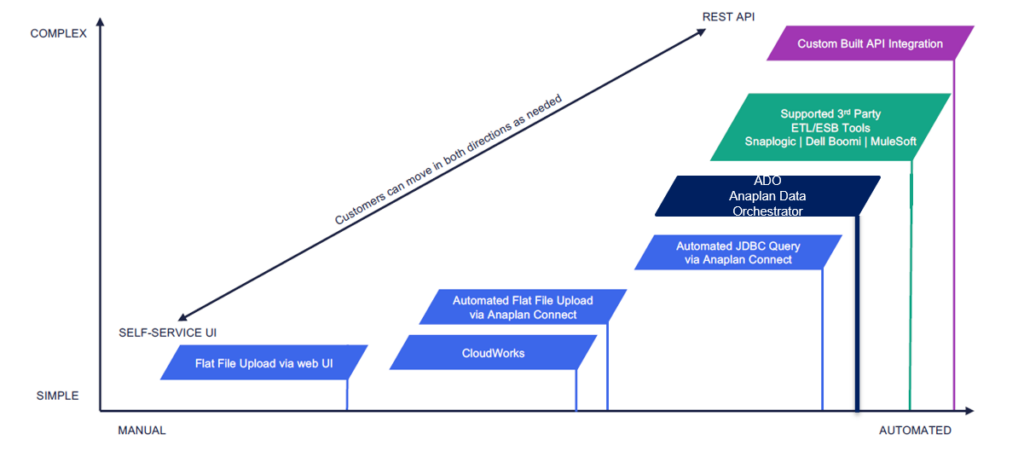

Core methods of data integration with Anaplan

Let’s explore some of the most common options:

- Flat file upload via Anaplan UI

Simple but effective, this method lets users manually upload CSV or TXT files via Anaplan’s interface. It’s a quick win for small-scale or ad hoc imports, but not suited to high-volume or automated pipelines.

- CloudWorks

This is Anaplan’s native scheduling tool, integrating with cloud services like AWS S3, Azure Blob, and Google BigQuery. It’s ideal for regular flat file transfers, but may require external scripting to manage file pre- and post-processing, such as renaming and archiving.

- Anaplan Connect

This on-prem command-line tool is great for scripted automation of flat file integrations and supports SQL queries via JDBC. It requires a Java runtime and Windows or Unix shell scripting skills, but offers powerful control over import/export processes.

- ETL/ELT tools with connectors

Tools like Informatica, Boomi, SnapLogic, Talend, and MuleSoft provide out-of-the-box connectors for Anaplan and upstream systems. These are great for organizations that already have an established ETL footprint and want to plug Anaplan into the broader ecosystem.

- Anaplan Data Orchestrator (ADO)

Anaplan’s newest data management solution allows no-code connections to systems like SAP (via OData), Snowflake, SQL Server, and cloud storage. It also replaces the traditional Data Hub by enabling data storage external to Anaplan workspaces. ADO is a powerful tool to connect, transform, store, and then distribute data to your Anaplan spoke models, but it comes with licensing considerations and a maturing roadmap of features.

- Integration APIs

For custom use cases, APIs are the most flexible option. Anaplan offers:

- Bulk APIs for batch import and export operations.

- Transactional APIs for real-time updates.

Anaplan Integration APIs are ideal for organizations looking to build tailored, scalable integrations with full control over schedules, dependencies, and error handling.
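To make the bulk flow concrete, here is a minimal dry-run sketch in Python that only lists the calls a batch import would typically make, without sending anything. The URLs and the four-step sequence are assumptions based on the publicly documented v2 bulk API and should be verified against Anaplan's current API reference; the IDs are placeholders, and `Step` and `bulk_import_plan` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass

# Assumed endpoints (verify against the official Anaplan API documentation).
AUTH_URL = "https://auth.anaplan.com/token/authenticate"
API_BASE = "https://api.anaplan.com/2/0"


@dataclass(frozen=True)
class Step:
    method: str
    url: str
    purpose: str


def bulk_import_plan(workspace_id, model_id, file_id, import_id):
    """Describe, in order, the calls of a typical bulk import (no network I/O)."""
    model_url = f"{API_BASE}/workspaces/{workspace_id}/models/{model_id}"
    return [
        Step("POST", AUTH_URL, "exchange credentials for a short-lived token"),
        Step("PUT", f"{model_url}/files/{file_id}", "upload the source file"),
        Step("POST", f"{model_url}/imports/{import_id}/tasks", "start the import action"),
        # {taskId} stays a literal placeholder: it is only known after the POST above.
        Step("GET", f"{model_url}/imports/{import_id}/tasks/{{taskId}}",
             "poll until the task completes, then inspect the result"),
    ]


# Placeholder IDs, not real objects.
plan = bulk_import_plan("WS_ID", "MODEL_ID", "FILE_ID", "IMPORT_ID")
for step in plan:
    print(f"{step.method:4} {step.url}  # {step.purpose}")
```

Writing the sequence down as data first makes it easier to agree on dependencies and error handling (what happens if the upload succeeds but the import task fails?) before any real calls are wired in.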

Data integration options:

Data integration best practices

Regardless of method, strong data integration depends on smart design. Here are some practical tips:

- Start with a data platform

Where possible, integrate Anaplan with a centralized data platform rather than pulling from source systems directly. This simplifies data governance and ensures consistent quality, naming, and formats across the enterprise.

- Use unique keys wisely

If your data doesn’t have a clean primary key, consider creating a concatenated field to ensure each row is truly unique. This is essential for accurate synchronization and conformity across your connected planning models.
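As an illustration, the sketch below builds a concatenated key and flags any rows that still collide. The field names (`cost_center`, `account`, `period`), the `|` separator, and the helper names are hypothetical; in practice the key is often built in the data platform or ETL layer before the file reaches Anaplan.

```python
from collections import Counter


def add_composite_key(rows, key_fields, sep="|"):
    """Return copies of the rows with a 'row_key' built from key_fields."""
    keyed = []
    for row in rows:
        parts = [str(row[field]).strip() for field in key_fields]
        keyed.append({**row, "row_key": sep.join(parts)})
    return keyed


def duplicate_keys(rows):
    """Return the row keys that occur more than once, sorted."""
    counts = Counter(row["row_key"] for row in rows)
    return sorted(key for key, n in counts.items() if n > 1)


# Hypothetical sample data: the last row repeats the key of the first.
sales = [
    {"cost_center": "CC100", "account": "6000", "period": "2024-01", "amount": 120.0},
    {"cost_center": "CC100", "account": "6000", "period": "2024-02", "amount": 95.5},
    {"cost_center": "CC200", "account": "6000", "period": "2024-01", "amount": 40.0},
    {"cost_center": "CC100", "account": "6000", "period": "2024-01", "amount": 10.0},
]

keyed = add_composite_key(sales, ["cost_center", "account", "period"])
dupes = duplicate_keys(keyed)
print(dupes)
```

A non-empty result means the chosen fields do not yet identify a row uniquely, so either another field belongs in the key or the source needs aggregating first.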

- Define load types upfront

- Delta loads update only changed records.

- Full loads overwrite entire datasets.

- Snapshot loads give you a point-in-time view.

Define when and how each is used, especially for history and catch-up loads.
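The three load types can be sketched with plain dictionaries keyed by a unique row key. This is a simplified illustration under stated assumptions, not how any particular tool implements them: the function names are hypothetical, and the delta shown here covers new and changed records only, so removed records are worked out separately.

```python
from datetime import date


def delta_load(previous, current):
    """Records in `current` that are new or changed compared with `previous`."""
    return {key: value for key, value in current.items() if previous.get(key) != value}


def full_load(current):
    """The complete current dataset, replacing whatever was loaded before."""
    return dict(current)


def snapshot_load(current, as_of):
    """The complete dataset stamped with a point-in-time date."""
    return {(as_of.isoformat(), key): value for key, value in current.items()}


# Hypothetical extracts from two consecutive days.
previous = {"P1": 100, "P2": 200, "P3": 300}
current = {"P1": 100, "P2": 250, "P4": 400}

delta = delta_load(previous, current)
removed = sorted(set(previous) - set(current))
snapshot = snapshot_load(current, date(2024, 1, 31))
print(delta, removed)
```

Deciding how removed records are handled (cleared, flagged, or ignored) is usually the part of a delta design that most needs agreeing upfront.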

- Document interface specs clearly

For every integration, maintain documentation that includes technical names, data types, formatting rules (especially for dates), precision, and mandatory fields. This reduces onboarding time and supports better debugging down the line.

- Build for reconciliation and assurance

Include row counts, checksums, or even simple “did this field load correctly?” rules. Load assurance metrics should be visible not just to IT, but also to the business users impacted by delays or errors.
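A minimal sketch of such a check, assuming both sides can be exported as CSV text with the same columns in the same row order: it compares a row count, a control total, and a SHA-256 checksum. The column names and helper names are hypothetical.

```python
import csv
import hashlib
import io
from decimal import Decimal


def load_fingerprint(csv_text, amount_field):
    """Compute row count, control total, and checksum for a CSV extract."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = 0
    total = Decimal("0")
    digest = hashlib.sha256()
    for row in reader:
        rows += 1
        total += Decimal(row[amount_field])
        # The checksum is order-sensitive: sort both extracts the same way first.
        line = "|".join(row[field] or "" for field in reader.fieldnames)
        digest.update(line.encode("utf-8") + b"\n")
    return {"rows": rows, "total": total, "sha256": digest.hexdigest()}


def reconcile(source, target):
    """Return the names of the metrics that differ between source and target."""
    return [name for name in ("rows", "total", "sha256") if source[name] != target[name]]


# Hypothetical extracts: the target is missing one row.
source_csv = "key,amount\nA,10.50\nB,20.25\n"
target_csv = "key,amount\nA,10.50\n"

source_fp = load_fingerprint(source_csv, "amount")
target_fp = load_fingerprint(target_csv, "amount")
mismatches = reconcile(source_fp, target_fp)
print(mismatches)
```

An empty list means the load reconciles; anything else names the metric to investigate, which is the kind of result that can be surfaced to business users as well as IT.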

- Make logs accessible

Error logs should be more than just a backend record—they should be easy to access and understand by both support teams and key stakeholders. Automate alerts where possible to flag failures early.
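One way to make logs readable is to turn raw load results into short plain-language alerts. The sketch below assumes each interface reports rows read, rows rejected, and an optional error message; the `LoadResult` structure, the 1% rejection threshold, and the interface names are hypothetical, and delivery (email, chat, ticketing) is left out.

```python
from dataclasses import dataclass


@dataclass
class LoadResult:
    interface: str
    rows_read: int
    rows_rejected: int
    error: str = ""


def build_alerts(results, reject_threshold=0.01):
    """Return one readable alert line per failed or degraded load."""
    alerts = []
    for r in results:
        if r.error:
            alerts.append(f"{r.interface}: FAILED ({r.error})")
        elif r.rows_read and r.rows_rejected / r.rows_read > reject_threshold:
            alerts.append(f"{r.interface}: {r.rows_rejected} of {r.rows_read} rows rejected")
    return alerts


# Hypothetical nightly run.
results = [
    LoadResult("GL actuals", 1000, 0),
    LoadResult("Headcount", 200, 15),
    LoadResult("Product master", 0, 0, "source file not found"),
]

alerts = build_alerts(results)
for line in alerts:
    print(line)
```

Clean loads produce no output, so stakeholders only hear about the interfaces that need attention.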

- Define your operating model

Integration isn’t just about the tech. Who monitors logs? Who fixes errors? Define roles and responsibilities clearly, particularly when handing over support from implementation to BAU.

Getting started with data integration

For those new to Anaplan integrations or looking to level up, there are plenty of resources to explore:

Final thoughts

There’s no one-size-fits-all solution when it comes to data integration, but there is a right way to approach it: with clarity, discipline, and a willingness to collaborate across business and IT. Whether you’re just uploading flat files or orchestrating complex flows with APIs, investing in integration best practice pays off.

Questions? Leave a comment!