One of the most common questions I get asked is when to use native versions versus using a general list to replicate versions. As is often the case, the answer is: it depends. Let me run through some key areas to help you with the decision.

Functionality

What functionality do you need? We always advocate using native Anaplan functionality where possible, if that provides the most logical, simple, and understandable formulas and structures.

So, what built-in functionality does using versions give us?

- Switchover functionality: automatic rolling forecasts using the latest actuals

- Version-specific functions: CURRENTVERSION(), PREVIOUSVERSION(), NEXTVERSION(), ISCURRENTVERSION(), ISACTUALVERSION()

- Formula scope: restricts a formula so that it applies only to the actual version, to all versions except actual, or to the current version

- Version formula: allowing specific formulas for different versions. This uses line item subsets and is only applicable for numeric formatted line items.

- Edit to/from dates: to allow read/write access to ranges of dates within a version

- Bulk copy: copy from one version to another for the whole model (although this applies to all lists, too)

- Formulas: within the versions list (although we don’t recommend this)

- Compare: lets you compare data values against a base case. You can choose what to compare the data against—a different version, a previous version of the current module, a list, or a time period.

- Structural data: versions are synchronized to deployed models as part of Application Lifecycle Management (ALM)

You can also use versions for iterative calculations. Each version is contained in its own calculation block, so the engine has built-in knowledge of the dependencies between previous and next versions (in a similar way to time). This means you can use the version functions listed above to iterate through the calculation if needed. If you need to use versions in this way, it is best practice to set the Actual? flag to true for the first version, and the Current? flag to true for the last version.

When referencing a source module with versions as a dimension in a target module without versions, the “current” version is automatically returned. This is why you should set the current version as the last version when using the iterative calculation technique described above.

Finally, an additional benefit of using switchover with versions is a reduction in cell count for the line item(s) using the switchover functionality. The cells for the “actual” periods are not counted as part of the calculation, which reduces the line item size and the memory and calculation effort needed.

Limitations

There is a lot of functionality that versions bring, but there are also some limitations (mostly due to the block structure, which I will explain later):

- You cannot set versions as a format for line items.

- Linked to the above, you cannot use LOOKUP or SUM when referencing a version.

- Similarly, you cannot SUM out of a module by version (as with time, this is due to the block constructs).

- You cannot create version subsets.

- Versions are structural and so cannot be deleted in development models without affecting production models (as part of ALM). This can potentially lead to larger than desired development models.

In summary, versions bring functionality that lists do not have, but there are limitations that do not apply to general lists.

Performance

The first aspect of PLANS is "performance", so we shouldn’t neglect that. Probably the biggest consideration here will be the number of versions. This is where the lack of subsets can have a big impact on size and performance. There are no parent levels within versions, but adding versions to modules with other large dimensions (and timescales) will add to the aggregation points and memory blocks needed. Remembering D.I.S.C.O., if all of the calculations and data points do not need all of the versions, then there will be a lot of extra calculations, memory, and size being consumed.

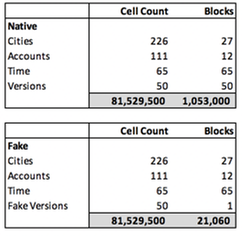

As with most aspects of PLANS and the Planual, we’ve done some testing to give you the “lab” results to quantify the impact. We tested the calculation volume and time for a simple model with native versions and a second model replacing versions with a general list of “fake” versions.

Test model details

Timescale: 1 year by week with a monthly and full-year total.

General Lists:

- Region: 200 Cities>20 Countries>5 Regions (with a top level)

- Channel: 100 Accounts>10 Channels (with a top level)

Versions: 50

Modules: City by Account by time by version/fake version

Line Item Formula:

- Data

- Cumulate (Data) – summaries on

Results

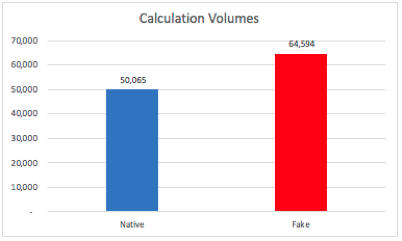

This shows how big the calculation is or how much work the engine needs to do. The results are quite similar, with native versions having a smaller calculation volume overall.

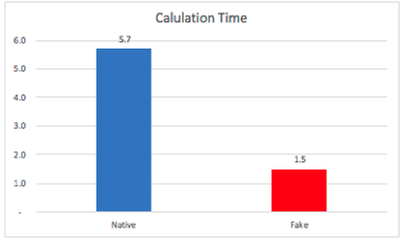

However, when we look at how long it took to perform the calculations, the results are very different.

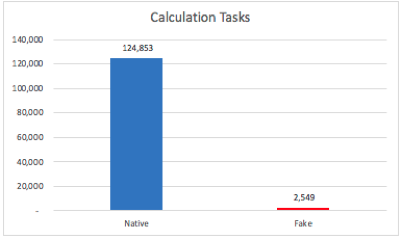

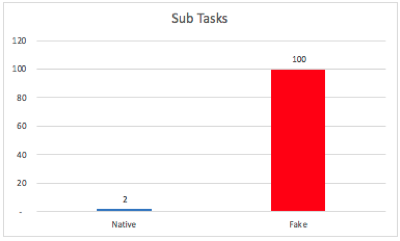

Why is that? When we look more deeply and analyze how many data tasks the engine processed, the results show clearly why the native versions model took longer to fully calculate on model opening.

The native versions model has nearly 50x more tasks to perform than the fake versions model. What is causing such a big difference?

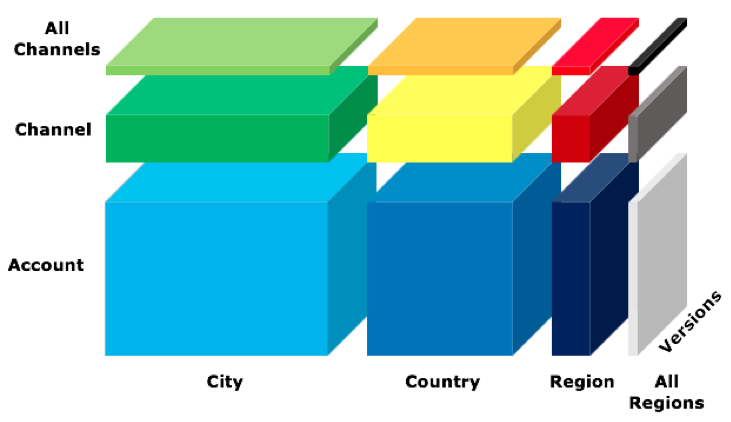

The answer lies in the block structure of the calculation engine. Each version (and time) member is contained in its own block. When we break down the other hierarchies, they also split into blocks. The lowest level of City and Account form the biggest block, and there are separate blocks for the aggregated levels at Country, Region, and Channel for the two hierarchies and finally the two top levels of the hierarchies.

The blocks are made up as follows:

- Cities: 27 = 1 for the lowest level of City, 20 for Country, 5 for Region, 1 for top level

- Accounts: 12 = 1 for Accounts, 10 for Channel, 1 for top level

- Time: 65 = 52 weeks, 12 months and a full year

- Versions: 50 = 1 for each version

The total number of blocks is the product of all of the blocks from the lists, time, and version blocks.

You can see that in the fake versions model, because native versions are not used, there is only one block for the fake versions list (it has no parents). The block count is therefore 50x smaller, which is roughly the same multiplier as the difference in the number of tasks.
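To make the block arithmetic concrete, here is a minimal Python sketch that multiplies the per-dimension block counts listed above. The variable and function names are mine, for illustration only, and it assumes (as described above) that a flat general list with no parents contributes a single block.

```python
from math import prod

# Block counts per dimension, taken from the breakdown above.
cities = 1 + 20 + 5 + 1      # lowest level, Country, Region, top level
accounts = 1 + 10 + 1        # lowest level, Channel, top level
time = 52 + 12 + 1           # weeks, months, full year
native_versions = 50         # one block per native version
fake_versions = 1            # assumption: a flat list with no parents is one block


def total_blocks(*blocks_per_dimension):
    """Total blocks = product of the block counts of every dimension."""
    return prod(blocks_per_dimension)


native_total = total_blocks(cities, accounts, time, native_versions)
fake_total = total_blocks(cities, accounts, time, fake_versions)

print(native_total)                # 1053000
print(fake_total)                  # 21060
print(native_total // fake_total)  # 50
```

The only thing that changes between the two models is the last factor, which is why the ratio is exactly the number of versions.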

This multiplier is also backed up when we look at the number of sub tasks created as part of the calculation. A large block can be split into smaller tasks that run in parallel over many CPU threads. The smaller blocks for the versions can only be split once. This is a factor in the efficiency of the calculation.

Although the volume of calculation for the fake versions model is slightly larger, it can be executed more efficiently across the processing threads of the engine (the maximum currently stands at 144 threads on the hardware). Think of the tasks as boxes, where each person can only move one box at a time. It is much quicker for 144 people to move 2,549 boxes than 124,853 boxes, even though the combined weight of the boxes is roughly the same.
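The box analogy can be sketched as a toy model in Python. This is not how the engine actually schedules work; it simply assumes each thread picks up one task at a time and counts how many "rounds" of task pick-up are needed. The task counts and the thread count come from the figures above; the `rounds` function is a hypothetical helper for illustration.

```python
THREADS = 144            # maximum threads quoted above
NATIVE_TASKS = 124_853   # tasks in the native versions model
FAKE_TASKS = 2_549       # tasks in the fake versions model


def rounds(tasks, threads=THREADS):
    """Rounds needed if every thread handles one task per round (ceiling division)."""
    return -(-tasks // threads)


task_ratio = NATIVE_TASKS / FAKE_TASKS

print(round(task_ratio, 1))   # just under 50x more tasks
print(rounds(NATIVE_TASKS))   # 868
print(rounds(FAKE_TASKS))     # 18
```

Under this simplified assumption, any fixed cost paid per task (picking up the box) is incurred 868 times per thread in the native versions model versus 18 times in the fake versions model, even though the total amount of calculation is similar.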

Decision time

So how do we decide? It depends on how critical the built-in version functionality is. It is possible, in almost all cases, to replicate the functionality using lists, Boolean flags, and alternative techniques such as Dynamic Cell Access, but these will require more modeling and add complexity.

You also need to consider just how many versions are needed. If only a small number of versions are required (e.g. fewer than five), then the performance impacts described above will be minimal, and the functionality benefits and simplicity may sway you towards native versions. However, a large number of versions can have a detrimental impact on performance, particularly if used in conjunction with long timescales and deep hierarchies. In this case, a fake version list would be preferable.

Finally, consider the maintenance and updates of the versions (specifically where ALM is in use). If versions are added regularly and the number of versions starts to increase, this will increase the size of the development model. However, versions are not likely to be the largest list within the model, and it is better to cut down other, larger production lists to keep the development model small.

So, in conclusion, the decision will rest on balancing the simplicity of built-in functionality versus complexity, efficiency, and performance. With this in mind, the answer could well be a mix of both. One of Anaplan’s big strengths is the ability to use appropriate and different structures in the same model. It is also a fundamental element of Anaplan's best practice (D.I.S.C.O.), so using both native and fake versions within a model is fine.

As with most Anaplan modeling problems, there is no one right or wrong answer, but I hope this has helped give you some guidance and insight into some of the inner workings of the Anaplan Hyperblock engine.

Author David Smith.

Contributing author Mark Warren.